Launch: LLMbench - a tool to undertake comparative annotated analysis of LLM output

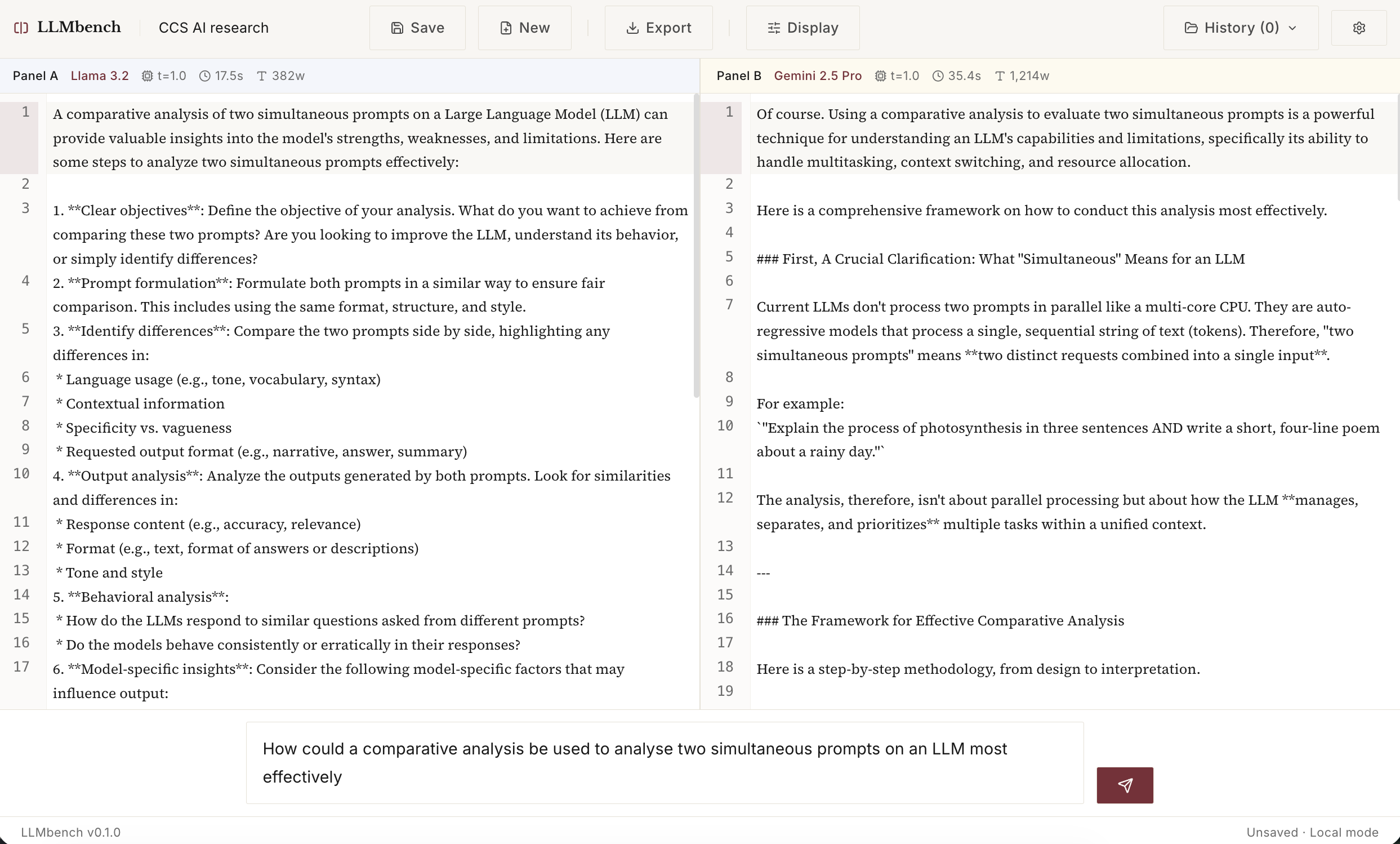

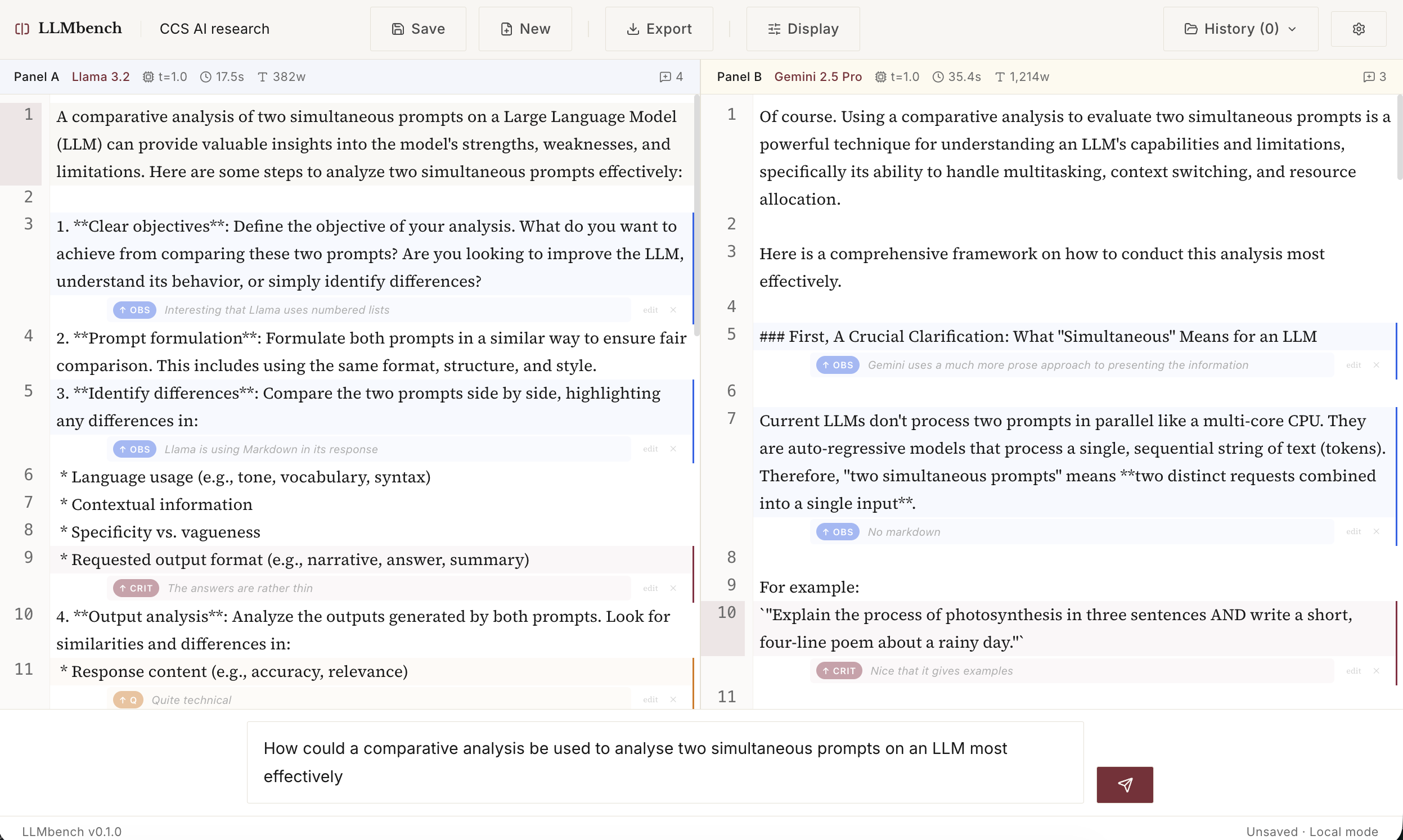

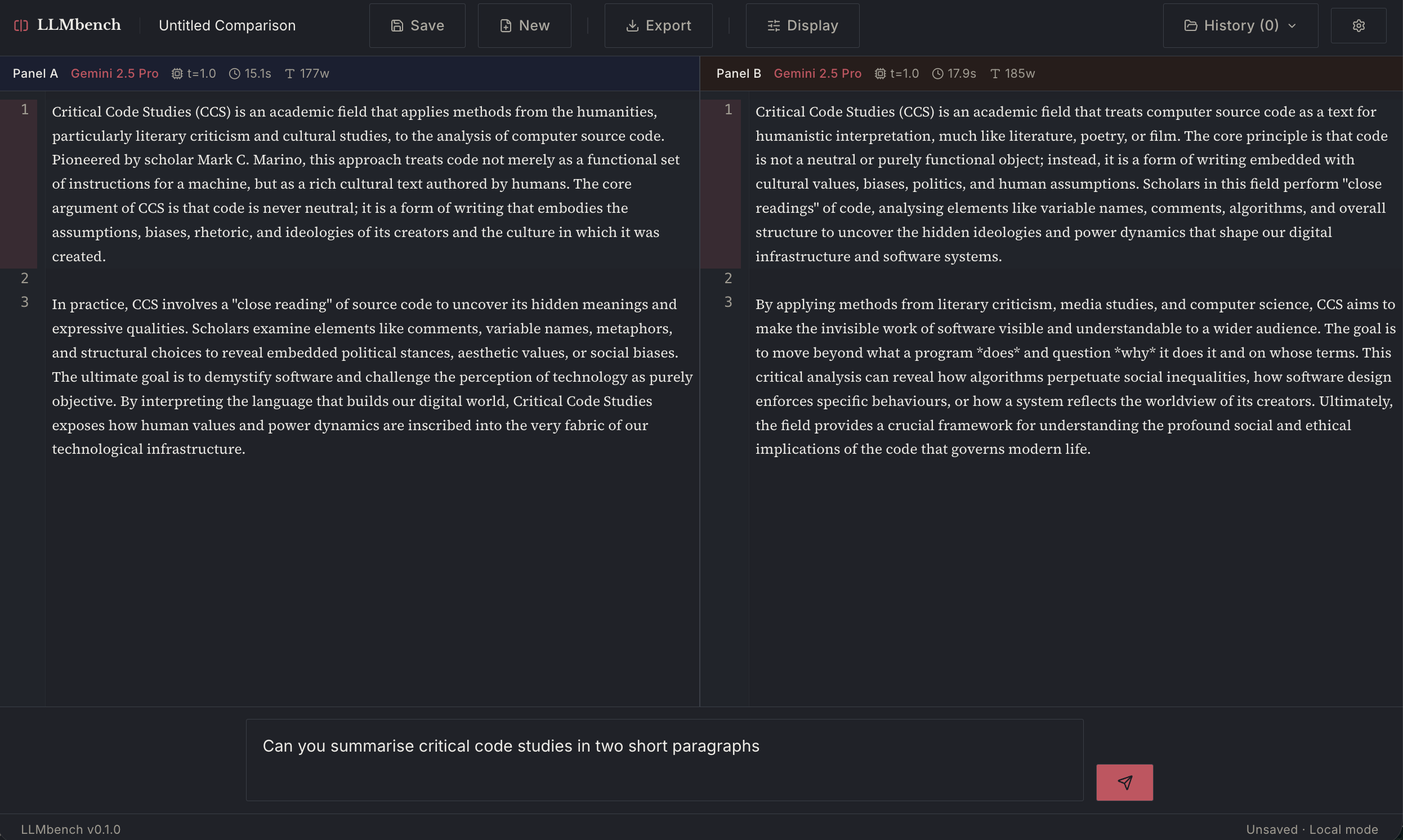

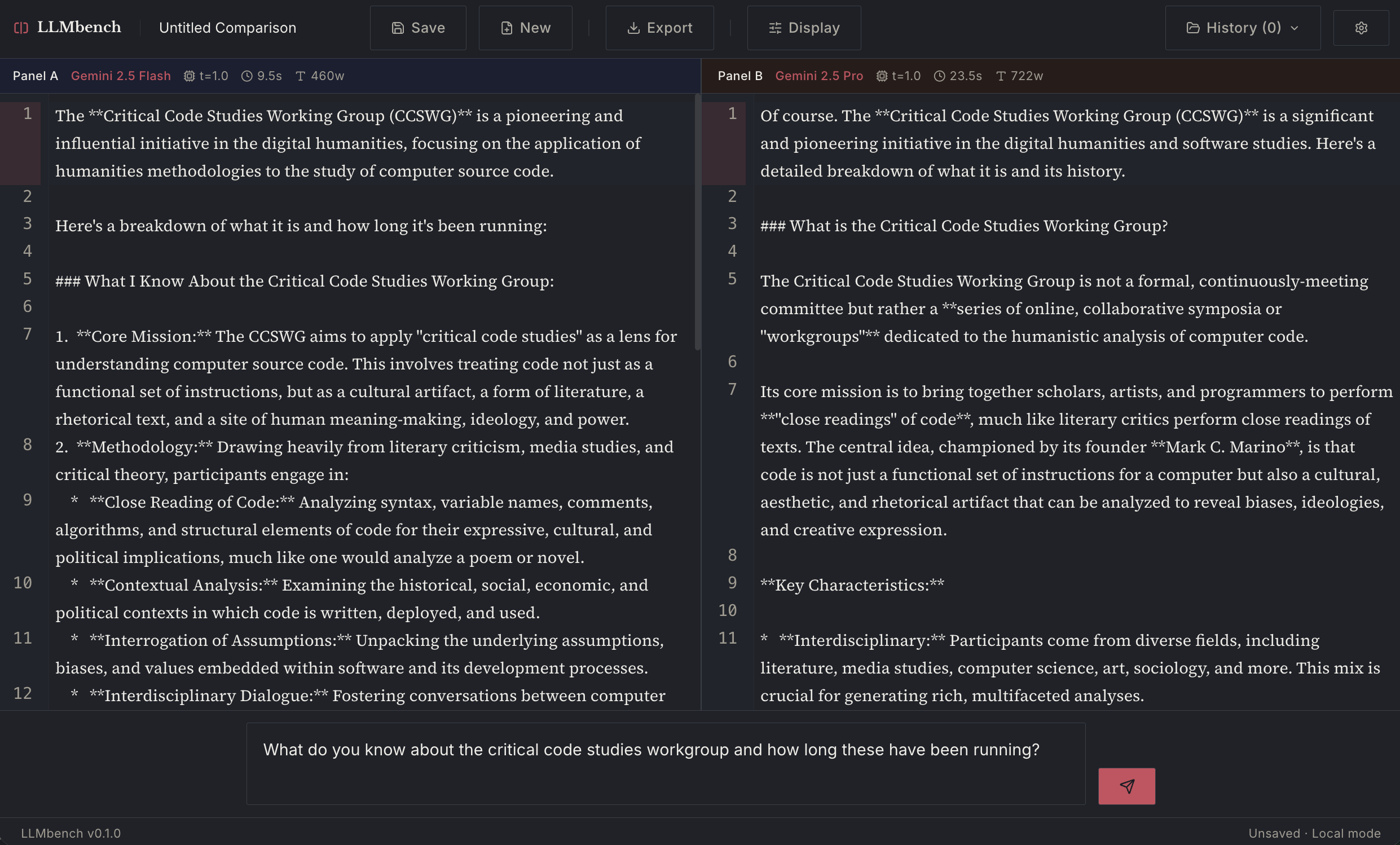

After the success of the CCS workbench, I have used the lessons learned from that tool to vibe code a new tool I call LLMbench. It lets you send the same prompt to two LLMs simultaneously and then compare and annotate their replies. The annotations can then be exported as JSON, text or PDF for later use.
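For anyone curious about the general shape of this workflow, here is a minimal Python sketch of it. It is not LLMbench's actual code: the `Panel` and `Annotation` types, the `compare` and `export_json` helpers, and the two stand-in "models" are all hypothetical names made up for illustration, and a real setup would call actual model APIs instead of the stubs.

```python
import asyncio
import json
from dataclasses import dataclass, field, asdict
from typing import Awaitable, Callable

# Hypothetical type: any async function that maps a prompt to a reply.
ModelFn = Callable[[str], Awaitable[str]]


@dataclass
class Annotation:
    start: int  # character offset where the annotated span begins
    end: int    # character offset where the annotated span ends
    note: str   # the analyst's comment on that span


@dataclass
class Panel:
    model: str
    reply: str
    annotations: list[Annotation] = field(default_factory=list)


async def compare(prompt: str, models: dict[str, ModelFn]) -> list[Panel]:
    """Send the same prompt to every model concurrently, one panel per reply."""
    names = list(models)
    replies = await asyncio.gather(*(models[name](prompt) for name in names))
    return [Panel(model=name, reply=reply) for name, reply in zip(names, replies)]


def export_json(prompt: str, panels: list[Panel]) -> str:
    """Serialise the prompt, replies and annotations for later use."""
    return json.dumps(
        {"prompt": prompt, "panels": [asdict(p) for p in panels]}, indent=2
    )


# Stand-in models (assumptions): replace with real API calls.
async def fake_model_a(prompt: str) -> str:
    return f"A says: {prompt}"


async def fake_model_b(prompt: str) -> str:
    return f"B says: {prompt.upper()}"


def demo() -> str:
    prompt = "What is a benchmark?"
    panels = asyncio.run(
        compare(prompt, {"model-a": fake_model_a, "model-b": fake_model_b})
    )
    # Attach an annotation to the first six characters of the first reply.
    panels[0].annotations.append(Annotation(start=0, end=6, note="framing"))
    return export_json(prompt, panels)


if __name__ == "__main__":
    print(demo())
```

Storing annotations as character offsets plus a note keeps the export simple, but it is only one possible design choice.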

In the examples below you can see I have compared Llama 3.2 and Gemini 2.5 Pro, but you could also compare two models from the same family (e.g. Pro vs Flash) or even the same model against itself (Pro vs Pro). See below.

It's still in early development so I don't have a deployment to share, but you can install it yourself by following the instructions here.

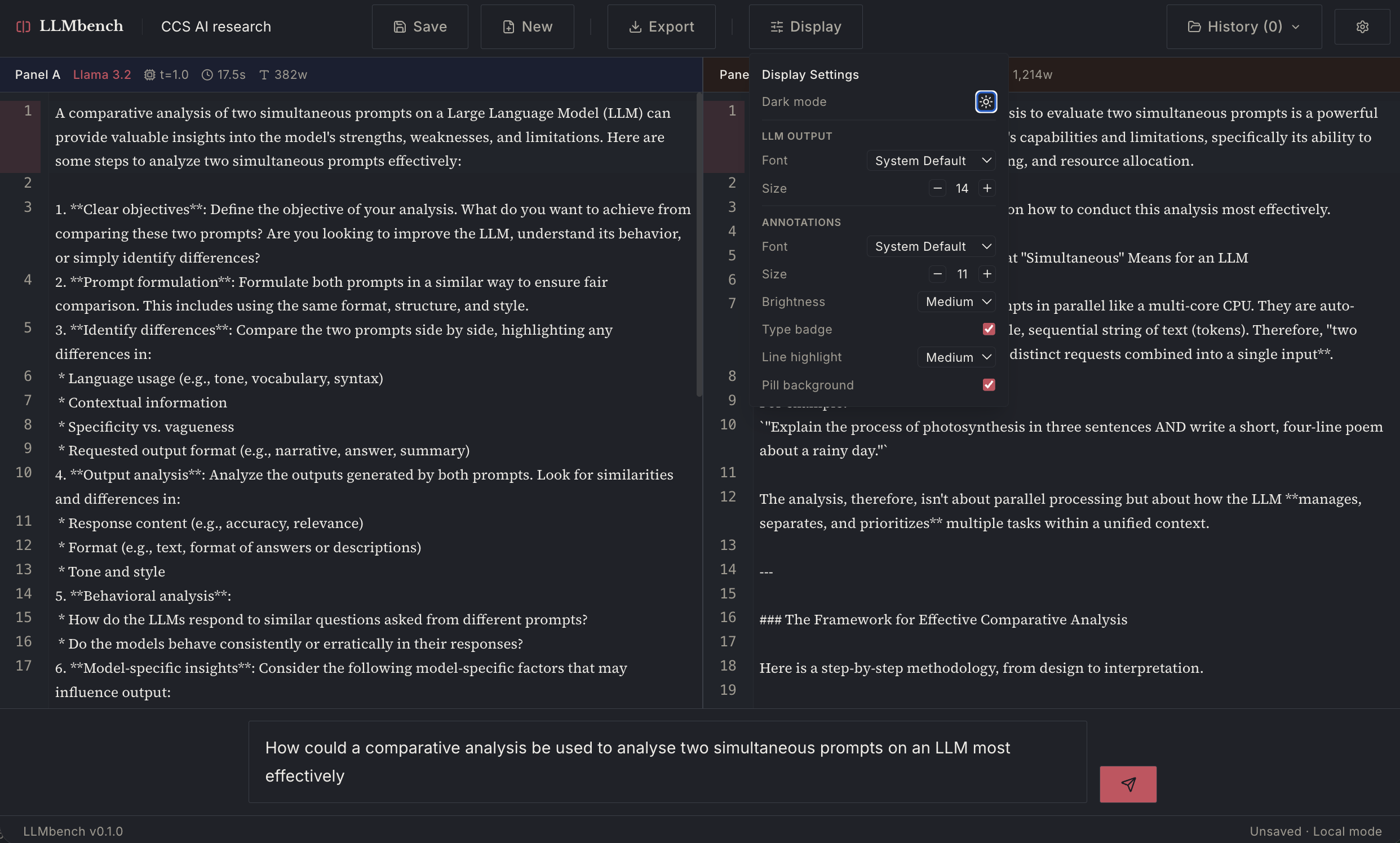

This is what it currently looks like (it already has a dark mode!).

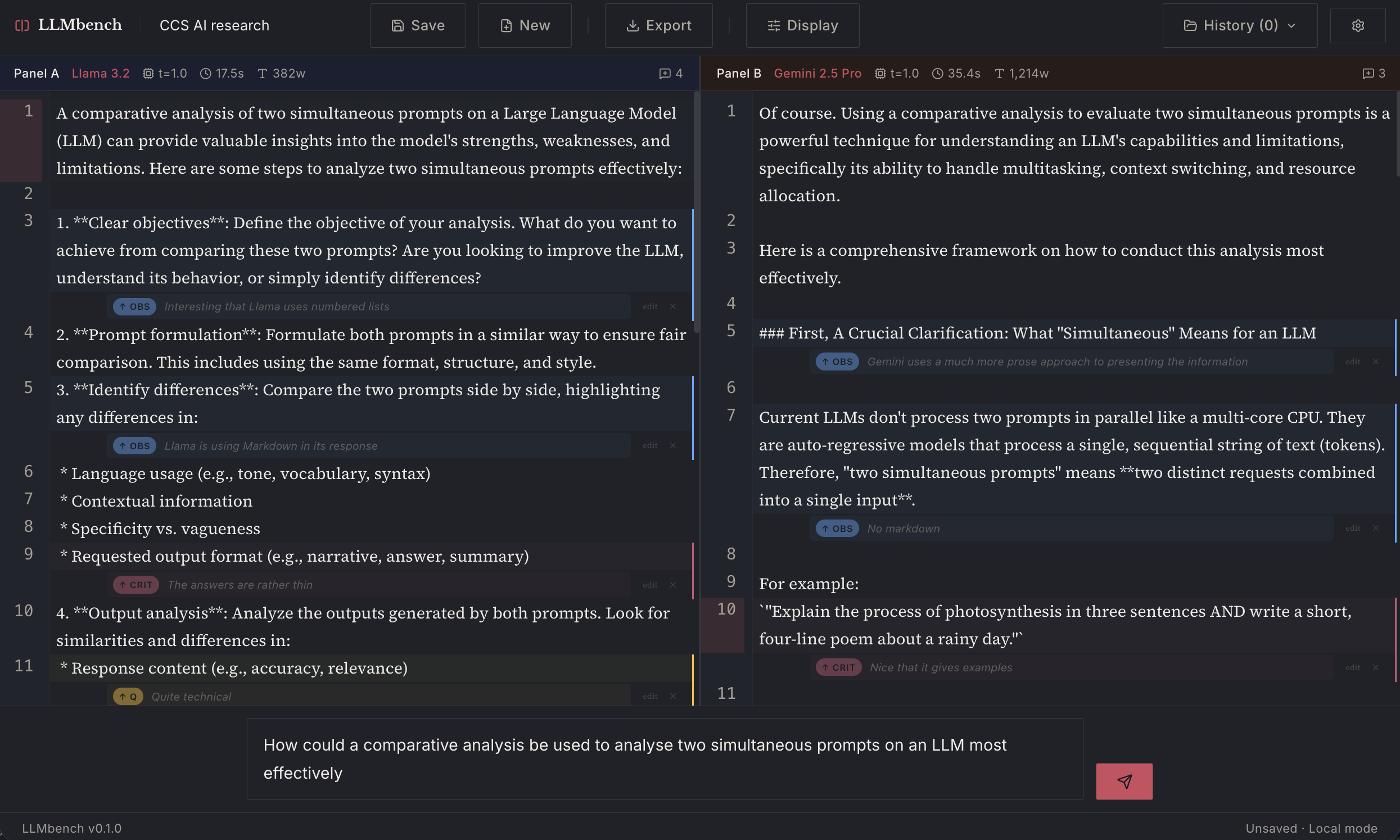

And here it is with annotation added:

Here are some examples comparing models from the same Gemini family. In the first (Pro vs Pro), you can see the effect of probabilistic generation: the same prompt, given simultaneously to two instances of the model, produces slightly different outputs.
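To give a rough feel for why two runs of the same model can diverge, here is a toy Python sketch of temperature sampling. It is only an illustration under stated assumptions: the four-word vocabulary, the scores in `TOY_LOGITS` and the `sample_tokens` helper are invented for this example and say nothing about how Gemini is implemented internally.

```python
import math
import random


def sample_tokens(logits, n, temperature, rng):
    """Draw n tokens from a softmax over the given scores (toy model)."""
    tokens = list(logits)
    top = max(logits.values())
    # Subtract the max score before exponentiating for numerical stability.
    weights = [math.exp((logits[t] - top) / temperature) for t in tokens]
    return rng.choices(tokens, weights=weights, k=n)


# Made-up scores for a made-up vocabulary (assumption for illustration).
TOY_LOGITS = {"the": 2.0, "a": 1.5, "this": 1.0, "one": 0.5}

# Two "instances" of the same model: identical scores, independent randomness.
run_a = sample_tokens(TOY_LOGITS, 8, 1.0, random.Random(1))
run_b = sample_tokens(TOY_LOGITS, 8, 1.0, random.Random(2))
print(run_a)
print(run_b)
```

With a temperature of 1.0 the two runs will usually differ, while a temperature close to zero makes the highest-scoring token win almost every time.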

The one above is Gemini Flash vs Pro.