Howdy, Stranger!

It looks like you're new here. If you want to get involved, click one of these buttons!

Categories

In this Discussion

Distant Writing: Code Critiques from NaNoGenMo and NNNGM

Distant Writing

How does a poet end up thinking in terms of algorithms, programming languages, and datasets?

I’m interested in exploring the work of writers of electronic literature who, instead of writing sequences of words directly, create a computer program or modify an existing one to generate their intended texts.

The practice of creating and repurposing “engines” encourage the development of born-digital poetic forms, such as Nick Montfort’s poem, “Taroko Gorge” which has been remixed hundreds of times since its publication in early 2009.

My notion of distant writing inverts Franco Moretti’s concept of distant reading, which is in turn a counterpoint to the hegemonic concept of close reading, by applying it to writing. With close reading we pay careful attention to a (presumably carefully chosen) sequence of words (let’s call it close writing) to draw meaning and insight from it— much of what we’re doing here at the CCSWG. With distant reading, Moretti invites us to develop tools and methods to examine large literary corpora to find patterns and insights about literary texts and movements. Distant writing subverts the notions of subjectivity, intimacy, and proximity in the Romantic vision of a writer who painstakingly chooses and arranges words in intended sequences. Distant Writing is about writers who create and implement algorithms to produce texts that achieve their goals.

We can extend the metaphor by conceptualizing varying distances between the writer and their text depending on how much control over the inner workings of the textual generation process the writer has. Here are some case studies, from short-distance to long-distance writing.

Short Distance Writing

Nick Montfort is well known in this community as a writer of generative works. Most of his oeuvre consists of carefully crafted minimalist generative literature. The openness of the MIT License these works are published in, and the versatility of his programming has encouraged others to remix and repurpose his works, as has been the case with “Taroko Gorge” (2009). For the code study, I want to present something more recent, his Nano-NaNoGenMo (NNNGM) novel consequence (2019). Here’s the code (in Perl) in its entirety:

perl -e 'sub n{(unpack"(A4)*","backbodybookcasedoorfacefacthandheadhomelifenamepartplayroomsidetimeweekwordworkyear")[rand 21]}print"consequence\nNick Montfort\n\na beginning";for(;$i<12500;$i++){print" & so a ".n;if(rand()<.6){print n}}print".\n"'

When you run that code in a terminal window, you get output like this.

consequence

Nick Montforta beginning & so a doorword & so a door & so a lifeweek & so a bodyname & so a handface & so a liferoom & so a nameplay & so a handdoor & so a time & so a bookplay & so a backcase & so a nameplay & so a caseweek & so a name & so a life & so a casepart & so a homeroom & so a doorword & so a book & so a yeartime & so a book & so a namehome & so a time & so a hand & so a lifefact & so a doorpart & so a work & so a roomlife & so a sideyear & so a yearhome & so a nameroom & so a part & so a roomfact & so a fact & so a handbody & so a workhand & so a timefact & so a playwork & so a homebody & so a headbody & so a homeback & so a headplay & so a bookwork & so a time & so a door & so a partbody & so a bodyhand & so a home & so a wordyear & so a play & so a room & so a facehead & so a head & so a roomdoor & so a hand & so a word & so a workside & so a caseweek & so a word & so a case & so a bodydoor & so a face & so a backwork & so a fact & so a timeface & so a yearcase & so a case & so a factplay & so a life & so a namename & so a time & so a fact & so a door & so a part & so a bodybody & so a faceyear & so a week & so a face & so a book & so a face & so a door & so a hometime & so a wordpart & so a lifeyear & so a weekyear & so a casebody & so a nameside & so a back & so a partdoor & so a sidebook & so a backpart & so a weekbook & so a part & so a

You can read the blog post about it here.

Some comments to get the conversation started:

- Part of the NNNGM constraint is to produce a 50,000 word novel (in the NaNoGenMo tradition) but with the added constraint of doing so with a program that is at most 256 characters.

- Montfort is experienced with this 256 character constraint, and created a series of generative poems titled ppg256 (2007-2012) where he developed the word compounding technique he uses here and in "Sea and Spar Between" (2010).

- By using exclusively 4-letter words, he was able to create a data set that could output common words and more poetically charged compound words. The constraint, which is very Oulipian, also a neat mechanism for code compression.

- The title, consequence, gestures towards that minimal foundation for plot--events connected by causality-- which is established by the connectors "& so a" in this novel. One thing leads to another in consequence all the way to its final period.

As distant writing goes, this is at a metaphorically short distance because the whole work is produced from this minimalist code and self-contained data set. Nick can manipulate the code and data set until it produces the output that fits his creative vision. Let's take another look at a similar, but more distant kind of writing.

Medium Distance Writing

OB-DCK; or, THE (SELFLESS) WHALE (2019) is also a NNNGM entry by Nick Montfort that transforms Herman Melville's Moby Dick into a version that removes all first person pronouns to remove the self, and the very narrator, from the novel.

Here's the complete code, though one must first download the text from Project Gutenberg and put it in the same directory you will be running the perl script from in a terminal window:

perl -0pe 's/.*?K/***/s;s/MOBY(.)DI/OB$1D/g;s/D.*r/Nick Montfort/;s/E W/E (SELFLESS) W/g;s/\b(I ?|me|my|myself|am|us|we|our|ourselves)\b//gi;s/\r\n\r\n//g;s/\r\n/ /g;s//\n\n/g;s/ +/ /g;s/(“?) ([,.;:]?)/$1$2/g;s/\nEnd .*//s' 2701-0.txt

Here's some of the output it generates:

Epilogue

“AND ONLY ESCAPED ALONE TO TELL THEE” Job.

The drama’s done. Why then here does any one step forth?—Because one did survive the wreck.

It so chanced, that after the Parsee’s disappearance, was he whom the Fates ordained to take the place of Ahab’s bowsman, when that bowsman assumed the vacant post; the same, who, when on the last day the three men were tossed from out of the rocking boat, was dropped astern. So, floating on the margin of the ensuing scene, and in full sight of it, when the halfspent suction of the sunk ship reached , was then, but slowly, drawn towards the closing vortex. When reached it, it had subsided to a creamy pool. Round and round, then, and ever contracting towards the button-like black bubble at the axis of that slowly wheeling circle, like another Ixion did revolve. Till, gaining that vital centre, the black bubble upward burst; and now, liberated by reason of its cunning spring, and, owing to its great buoyancy, rising with great force, the coffin life-buoy shot lengthwise from the sea, fell over, and floated by side. Buoyed up by that coffin, for almost one whole day and night, floated on a soft and dirgelike main. The unharming sharks, they glided by as if with padlocks on their mouths; the savage sea-hawks sailed with sheathed beaks. On the second day, a sail drew near, nearer, and picked up at last. It was the devious-cruising Rachel, that in her retracing search after her missing children, only found another orphan.

It is discussed in the same blog post I cited earlier, available here.

Some comments:

- By detecting and eliminating all personal pronouns from a novel narrated in the first person, Montfort has almost completely deleted Ishmael from the narrative. Clearly, "one did survive the wreck," but who exactly? Who is this narrator that tells the story of these colorful characters, Ishmael, Ahab, Queequeg, and a white whale?

- This kind of operation upon a novel hearkens back to Oulipian operations (such as the N+7 process, in which they would substitute nouns in a text with the seventh word that followed it in a dictionary to create a poetically distorted text), and is also firmly in the NaNoGenMo tradition, which draws heavily from Moby Dick.

- This program only produces one output from a text, though it could be used on other texts.

- The greater distance comes from the fact that it is built upon a text written by someone else. Montfort can modify the program to produce results that better achieve his artistic goals, but he can't change the main data set and still say it's Moby Dick.

Long Distance Writing

With long distance writing, the author either has limited control over the engine or the data set. I will not offer a code sample for long distance writing because, in most cases, it is either unavailable or impractical, but here are some examples and thoughts.

- Two of my favorite Twitter bots, @Pentametron by Ranjit Bhatnagar and @HaikuD2 by John Burger, search through the Twitter stream for tweets that happen to be in iambic pentameter and rhyme before retweeting them together or that could be cut into the shape of a haiku, respectively. One could argue that Bhatnagar and Burger haven't really written anything, and have only created the programs to distill rhyming couplets and accidental haiku from Twitter, but that would feel somehow unfair to the wonderful texts their bots produce. Their intent, craft, and execution results in works that wouldn't have existed without their vision, and that constitutes authorship, as far as I'm concerned.

- Bhatnagar's 2018 book Encomials: Sonnets from Pentametron explores the concept further by using different formal constraints to produce more complex poetic structures drawn from the raw material detected by @Pentametron. These feel less distant than his original bot, because we see Bhatnagar making more deliberate choices and refining his programming to produce output that more closely achieve his vision.

- Another popular method consists of training a Markov chain generator, neural network, AI framework, or chatterbot with texts and then prompting them to produce output based on the probabilities they detect. This year's NaNoGenMo featured several works that used GPT-2, an open AI framework. The authorial impulse is to train the frameworks, many of which operate as black boxes, to produce the kinds of output one desires.

- An example of this is The Orange Erotic Bible (2019), an anonymous work generated by the GPT-2 framework trained on a corpus of erotic literature and the King James Bible to produce erotic texts in the biblical tradition. You can see the code, corpora, and generated novel (Bible?) in the Github issue. The distance between the author and the text produced is substantive-- they didn't write any of the source texts, and performed limited operations on the output. Yet the results are so hilariously NSFW that I understand why the author chose anonymity to put some additional distance between themselves and the work.

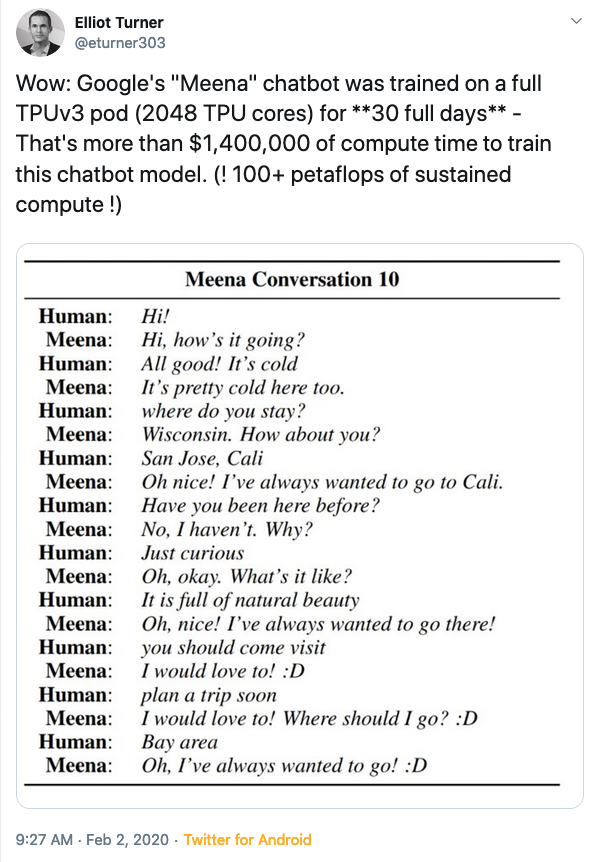

- This tweet refers to a case of distant writing (of a bot powered character) that feels most removed from the authorial team (Google?) than all the previous cases. Here we have the distance of corporate authorship (Google's AI team) and a chatbot whose operations are hidden in a hardware and software black box. The only information that achieves escape velocity from this corporate black box is news of the effort, resources, and processing feats achieved.

Closing In on Distant Writing

Now that I've introduced the concept of distance from one's writing and shared some examples, I invite commentary and critique: of the concept, the examples, and the code provided. I hope this generates some conversation!

Comments

Distant reading is an interesting approach for thinking about the aesthetics of generated writing and how the reader can engage with it. There's this idea in the philosophy of literature of taking a "fictive stance". When we read something, we can choose to treat it as fiction and thereby suspend our disbelief. With this stance comes a set of assumptions about the value of treating something as fiction. But many of the qualities that we expect from fiction are often not apparent in generated writing: thematic unity, consistency of character and setting, distinction between characters, distinction between dialog and description etc.

To appreciate a lot of generated writing, it's more fruitful that we take an interpretative stance that seeks meaning and expects to sift through incoherence to find it. In this way, appreciating generative writing is more like appreciating a conceptual art piece than appreciating a novel. Or to put it another way, most 'generated novels', and certainly all of the kind Nick Montfort has made, aren't actually novels, they are conceptual art pieces in the dress of a novel. A novel is a work that can afford us a fruitful experience through taking the fictive stance; a conceptual-art-piece-as-novel is better enjoyed through short, disengaged, interpretative reading. This stance might be encompassed by the idea of distant reading.

Hi Leonardo. Thanks for posting this critique! I'm glad for this opportunity to think more about NaNoGenMo, particular through the hermeneutics of distance you describe here. I started working in this direction in my ELO18 talk and I'm glad to return to it. (I have my slides online, and some of those ideas ended up in my contribution to the Kathi Berens' themed issue of JCWS -- I'm theoretically working on an article more closely following my ELO18 talk.)

If I remember correctly, I had originally proposed my talk with the title "Distant Reading / Distant Writing," or at least I think I used the phrase "distant writing" at some point in the talk, but in any case I think your delineation of "short" and "medium" distances are really important distinctions if the idea of distance is to be useful as a way to think about writing.

The great thing about the vocabulary you set up here is that we can use it to see how it accommodates different works in the NNGM corpus.

In terms of long distance writing, I think all of the many descendants of Travesty certainly make sense here (particularly Markov-chains and neural networks), both because the creator's proximity to the code (or at least the essential algorithm) is somewhat removed and because these approaches can incorporate large sets of text for training. That is, when I write a program that generates text using a Markov chain, I'm not really writing that algorithm, I'm using something that already exists, either literally (

import pymarkov) or figuratively by writing my own implementation of the idea.I like the idea and the examples of of medium-distance writing as well. I'd include examples like 50,000 Meows in this category because the relationship between the appropriated work and the new work is determinative, traceable, and easy to understand.

I'm still thinking about the short distance category and terminology. I can imagine why "close writing" might collide with "close reading," but the idea of close proximity in the generative workflow to me suggests something different than the minimalism you propose here. To me (and maybe this is just overflow from "close reading"), "close writing" would imply those NNGM works that are the more baroquely constructed, overdetermined, template-driven works wherein the writer's involvement in the final product is much more evident. I'm thinking of Twitter bots like Moth generator, simulation-driven novels like Martin O'Leary's Deserts of the West, or visually rich works like Liza Daly's Seraphs or natalia n's Shadows. For these three books, what we are likely to respond to aesthetically are those elements which we can confidently attribute to the author's creativity and craftsmanship as opposed to the stochastic or determinative forces that result in 50,000 Meows or World Clock.

Lots to think about!

Thank you for your generous elaboration of the concepts, @zachwhalen!

Buried in a parenthesis, I defined close writing as what we normally understand as writing. Let me unpack it further: what we understand as writing is when a person selects words and arranges them in sequences using writing implements to give them a material form that captures what is collectively called a text.

Close reading is what we reward "quality" close writing with, I suppose. And that is where the hegemony of the page begins to assert itself on how we even conceptualize reading, writing, aesthetics, and criticism.

@Leonardo.Flores

I had a few more thoughts on this, springing from some of the discussion on the Travesty Generator thread. I'm not sure exactly how to fit these ideas together, but one of the questions @markcmarino asks has to do with sharing code or not, and it occurs to me that there may be something similar in the typology here.

There's a strand of NNGM works, including some you quote here, where one can immediately understand the idea after a brief description or a small sample of code. These seem to be your "medium distance" type, and would include things like the aforementioned 50,000 Meows or Moby Dick (hehe) by matthewmcvickar. You can get the idea from the author's summary:

By reading the step-by-step explanation of what it does:

Or by viewing the source code. The crux of the operation seems to happen when the trigger words are defined:

Each of these views adds dimensions to our understanding of the idea, all of which are arguably superior to the experience of actually reading the whole modified novel.

Anyway, I don't know if this is true for all "medium distance" works, but what I like about "medium" is that I can understand the work as a relationship between the code and the resulting novel.

For your short distance pieces, especially the NNNGM pieces, the code must be the text because (after Cayle) the text here is not the text. I'm more interested in what @nickm can do with Perl than I am in what the resulting book can convince me of or make me feel. That text is a different kind of afterthought than is the case for Moby Dick (hehe), because Nick's printed text is the validation that this code actually does something.

So I don't know how widely this will fit, but from these examples it seems that there's a continuum of proximity that correlates with interest in code vs interest in output. As such, maybe this is another version of the dichotomy between the Tale-spin effect and the Eliza effect?

@Leonardo.Flores -- thank you so much for this.

Did you first float a related idea about "distant writing" in your 2013 post on "Pentametron"[1] and then have it cited by Rodley and Burrell in their 2014 chapter?[2] Or is there an even earlier version of this concept?[3]

My sense is that you have since moved in a different direction or evolved from data mining per se?

You articulate the "distance" of writing here as the writer's "control over the inner workings of the textual generation process," and then classify some examples as short-medium-long -- where "short" is high-control for the writer over the writing process, and "long" is low control. In the examples, smaller code and moreso smaller output (sonnet sized) as opposed to larger code and larger output (up through novels and to unlimited output generators) also roughly correlate with more versus less controlled. The examples are extremely useful, and the concept a fascinating one. You are also expanding an on important ongoing conversation about being in-control / out-of-control that is a longstanding problematic and form of play for electronic literature authors and critics. We can perhaps see it even in foundational works like Strachey's love letter generator (1952), which plays with the profusion of language and the mode of authentic sincerity in the love-letter genre. In criticism I'm also thinking for example of Jill Walker Rhetberg writing "Feral hypertext is no longer tame and domesticated, but is fundamentally out of our control."(2005)[4]

My main critique of these classified examples is that they may not (yet!) give us a useable heuristic for agreement when applying this concept of "distant writing" to a range of work. For example, when I attempted to classify some of the same examples I arrived at different results. I suspect that part of the problem for me is how we define the criteria for categories or the metric for a spectrum. Perhaps the very idea of writer "control" could be further focused, particularly when measuring the output of a writing process. When I tried to unpack it I took it to potentially mean that the output was writer "specified," or that it was writer "predictable," or that it was writer "authorized." From these forms of control, evidence that a text was out-of-control might then include:

So, for this Working Group's code critique of Sea and Spar Between and the fascinating story of a bug report, the subsequent discussion then produced multiple additional bug reports. The fact that these are even possible might be taken as prima facie evidence of the writing process operating out of intention, thus to some degree out of control, making it more "distant."[5]

In the case of algorithmic texts I suspect that working through such questions systematically would be something like reworking a revised form of Aarseth's Cybertext(1997)[6] and its "textonomy" (p65, 68) where certain concepts such as "dynamics" or "determinability" are reconsidered here, not as nominal/categorical scales, but as control-ratings considered primarily from a writing perspective rather than an ergodic reader / player perspective. We might then expect there to be a sum rating -- or principle components, like the ones Aarseth computed -- that would roughly correspond to how in-control or feral (how close or distant) we considered a given algorithmic writing process to be, depending on which dimensions we agreed to consider in that definition.

For consideration of whether such an approach can work if it is multidimensional, I think consideration of combined outliers on different scales would be most useful. Is a very small perl program that repeatedly surprises its author written more or less "distantly" from a novel-length rewrite that is deterministic and well understood -- or should these two forms of distance not be combined into one scale?

However such questions are resolved, I think that a useful spectrum would also be able to address traditional poetic composition: if "consequences" is short distance, what then is "Sonnet 130"? Shortest distance? Ideally a concept of writing distance would also have something to say about control in relation to other forms of software-mediated writing that are more quotidian, such as form letter email replies, auto-suggest text messages, or filing taxes online.

Flores L. “'Pentametron' by Ranjit Bhatnagar.” I Love E-Poetry. March 6, 2013. http://iloveepoetry.org/?p=48 ;↩︎

Rodley, Chris, and Andrew Burrell. "On the art of writing with data." The future of writing. Palgrave Macmillan, London, 2014. 77-89. "Flores wonders whether the technique of data-mining online text might be considered a kind of 'distant

writing'." ↩︎

As an aside, the most common citation for "Distant Writing" is Stevens, R. "Distant writing, a history of the telegraph companies in Britain between 1838 and 1868." (2007-2012). The title is a wordplay on the etymology of "telegraph" as 'distant' + 'writing'. This is the sense Landow uses in Hypertext 3.0 when writing "it is at precisely this period in human history we have acquired crucial intellectual distance from the book as object and as cultural product. First came distant writing (the telegraph), next came distant hearing (the telephone), which was followed by cinema and then the distant seeing of television." Landow, George P. Hypertext 3.0: Critical theory and new media in an era of globalization. JHU Press, 2006. p46. ↩︎

Walker, Jill. "Feral hypertext: when hypertext literature escapes control." Proceedings of the sixteenth ACM conference on Hypertext and hypermedia. 2005. http://jilltxt.net/txt/FeralHypertext.pdf ;↩︎

An interesting question beyond electronic literature is whether typos and errata in general (possibly including manuscript, editorial errors, and even printing errors) might be comparable evidence of writing "distance." ↩︎

Aarseth, Espen J. Cybertext: Perspectives on ergodic literature. JHU Press, 1997. ↩︎