Code Critique: AI and Animal Experimentation reading in Protosynthex III, 'There is a Monkey'

Title: Protosynthex III, Example 3 in ‘A Deductive Question-Answerer for Natural Language Inference’, https://dl.acm.org/doi/10.1145/362052.362058

Authors: Robert M. Schwarcz, John F. Burger, Robert F. Simmons

Languages: Protosynthex III, perhaps LISP?

Year: 1970

I’ve been looking through a copy of Jean Sammet’s Programming Languages: History and Fundamentals (1969), particularly the sections on string processing languages and specialty languages. The latter contains a description of Protosynthex, which is a language designed to be used for question answering tasks. I was curious about this language because I’m interested in the history of AI, and 1960s AI research seems to have been tied very closely to linguistics and logical reasoning - arguably much closer than it is today. The Protosynthex code example in Sammet’s book is quite short, and it’s been difficult to find more examples. I did manage to find some example uses of Protosynthex III in a research paper from 1970 titled ‘A Deductive Question-Answerer for Natural Language Inference’, by Schwarcz, Burger, and Simmons. I’ve taken screenshots of the example code in Example 3 and included it in this thread as a PDF. I've also included the paper, where the code can also be read from pages 181-182. It’s not clear to me whether Protosynthex III is a distinct language like Protosynthex is, or if it is actually a form of the LISP language.

What I find interesting about the example code in _Example 3_ (pp. 181-182), which I’ll call ‘There is a Monkey’, is that it appears to play out a scenario which could be seen in an animal experiment. ‘There is a Monkey’ declares a monkey, a box, and bananas. The bananas are positioned in such a way that they can only be reached if the box is moved TO THE BANANAS. Towards the end of the example code a query reads DOES THE MONKEY GET THE BANANAS?, with the final line of code stating MONKEY REACH BANANAS. The paper states that this example is “...adapted from an Advice Taker problem proposed by [John] McCarthy” (p. 174) in his ‘Situations, actions, and causal laws’, found in Stanford Artificial Intelligence Project Memo No. 2, July 1963. McCarthy is the inventor of the LISP language, which has been historically very popular in AI.

There’s no background given about why or how the monkey, box, and bananas have been brought together, what their size and distance from each other is, why or how the bananas are suspended, nor any information about where this event or simulation is taking place. Indeed, we do not really know if (THERE . PLACE) on line 7 even has a floor or walls, but I suspect that most people who read this code will assume that the event or scenario is not taking place in a monkey’s natural environment (do you agree or disagree?). This event or simulation could have instead easily been written by declaring a climbing tree instead of a box, but for whatever reason a human made object is declared as the means or tool for reaching the bananas. This seems very unnatural, as if an animal is being observed and tested in an experiment environment in order to collect evidence or to prove something. Of course, the actual purpose of this code is to prove or demonstrate what types of question answering experiments can be conducted using Protosynthex III. However, this in itself does not explain why a linguistic and AI experiment is performed as an event or simulation set up in a minimal, controlled environment designed to test if a monkey will reach its food. I therefore find it curious that the emerging field of AI in the 1960s would reproduce controversial forms of experimentation as seen in biology and psychology in its models. Perhaps there is a prestigious ‘scientific aesthetic’ that is trying to be emulated. Perhaps it’s just following what is perceived to be a convention. Perhaps you can see other readings?

The paper offers its own explanation of ‘There is a Monkey’, which I’ve copied here:

“Explanation. The monkey gets the bananas if he reaches them, and he can reach them if he stands on an elevated object under them and reaches for them; the monkey stands on the box, which is an elevated-object, and reaches for the bananas, and so if the box is under the bananas the monkey has succeeded in reaching them; the box is under the bananas if it is lower than they, which it is, and also at the bananas; the box is at the bananas if some animal has moved it to the bananas--which of course the monkey has done; therefore, the monkey reaches the bananas. The question-answerer follows just this reasoning path in answering the question (though in terms, of course, of the formal concept structure) ; the recombination of subgoal answers into an answer to the question is shown by the trace of STOGOAL in the Appendix. It is interesting to note here, of course, the input of inverses (converses) and inference rules by means of English sentences; with a few additional grammar rules and pieces of lexical information, properties could be input in this manner also”(p. 176).
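The reasoning path in that explanation can be sketched as a small backward-chaining prover. To be clear, this is not Protosynthex III and not the paper's formal concept structure; it is a minimal Python sketch that treats each clause of the explanation as a plain string. The names `FACTS`, `RULES`, and `prove`, and the wording of every proposition, are my own assumptions for illustration.

```python
# Facts taken from the paper's prose explanation, written as plain strings.
# The exact wording of each proposition is an assumption made for this sketch.
FACTS = frozenset({
    "monkey stands on box",
    "box is an elevated object",
    "monkey reaches for bananas",
    "box is lower than bananas",
    "monkey moved box to bananas",
})

# Each goal maps to the subgoals that must ALL hold for it to be proved.
RULES = {
    "monkey gets bananas": ["monkey reaches bananas"],
    "monkey reaches bananas": [
        "monkey stands on box",
        "box is an elevated object",
        "box is under bananas",
        "monkey reaches for bananas",
    ],
    "box is under bananas": ["box is lower than bananas", "box is at bananas"],
    "box is at bananas": ["monkey moved box to bananas"],
}


def prove(goal, facts=FACTS, rules=RULES, depth=0, trace=None):
    """Backward-chain from a goal down to known facts.

    Assumes the rule set has no cycles, which holds for the rules above.
    If a list is passed as `trace`, each goal visited is appended to it,
    indented by its depth in the proof.
    """
    if trace is not None:
        trace.append("  " * depth + goal)
    if goal in facts:
        return True
    subgoals = rules.get(goal)
    if subgoals is None:
        # Neither a known fact nor the head of any rule.
        return False
    return all(prove(sub, facts, rules, depth + 1, trace) for sub in subgoals)


if __name__ == "__main__":
    steps = []
    print(prove("monkey gets bananas", trace=steps))  # prints True
    print("\n".join(steps))
```

Removing "monkey moved box to bananas" from the facts makes the query fail, which mirrors how much of the scenario hangs on that single declared action.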

Comments

@Lesia.Tkacz What a fascinating example, pointing to the curious researchers of the 1960s. This does look very LISP-y.

I wanted to begin by monkeying with the research. A little leg work might shed some light.

I found McCarthy's example here.

The citation is "Situations, Actions, and Causal Laws." 3 July 1963. I wonder if the problem appears anywhere before this one?

That is followed by a passage that breaks down the logical inferences and deductions of the problem in symbolic form.

Perhaps one of these sources offers an earlier source: (These are cribbed from the end of McCarthy's article, copied from the PDF, so may have some errors.)

Intercourse Part I." Amsterdam (1960)

London, England; 1959

Studies (1958)

I would take a look at these articles next to try to get a sense of where this problem was framed prior, assuming it was. I did not find any references to monkeys in the 1959 text by McCarthy. There don't seem to be any monkeys in Minsky either, although he does begin with a visitor from another planet, who would be "puzzled" by our anxiety about and low regard for the mechanical brains we've created.

Could the monkey problem be a popular formulation in the realms of logic? Or did he just pull this one from popular culture notions of behavioral animal experimentation?

According to this paper, An Approach Toward Answering English Questions From Text by Simmons, Burger, and Long (p.361), Protosynthex II was also written in LISP, although it doesn't give a version. I see from the first pdf you posted, @Lesia.Tkacz, that Protosynthex III was in LISP 1.5.

This language is new to me, but it looks like a dialect of LISP developed for natural language parsing.

Perhaps a monkey in a contained environment makes a good protagonist for box-world type parsing, like the robot in SHRDLU.

BTW, the Jean Sammet book is amazing and I regularly throw it in my bag to look through on my commute.

I believe that the "monkey gets banana indirectly" experimental form that shapes Protosynthex III, Example 3 does have an earlier referent. While Schwarcz et al. are duplicating the code example in McCarthy, I suspect that McCarthy is computationally re-enacting (directly or perhaps indirectly) a simplified form of the much earlier animal intelligence studies that were first conducted around 1913-1915, published in 1916, and popularized in English thereafter in 1925.

At that time there were two researchers working on field station tests of primate intelligence: Wolfgang Köhler, who worked at the Prussian Academy of Sciences Anthropoid Station in La Orotava, Tenerife in the Canary Islands from 1913-1916, and Robert Yerkes, who corresponded with Köhler and planned to work at the same research station but, due to the outbreak of WWI, instead worked at the Hamilton private laboratory in Montecito, California in 1915. Both men would later serve as Presidents of the American Psychological Association (Yerkes in 1917, Köhler in 1959); Köhler is perhaps best known in association with gestalt psychology. Each conducted numerous experiments on ideation / intelligence / problem solving with primates, and they initially reported on their work separately and a few months apart in 1916. Yerkes' monograph was The Mental Life of Monkeys and Apes: A Study of Ideational Behavior (1916).[1] Köhler's just-earlier report Intelligenzprüfungen an Anthropoiden was later published as a book in 1917[2] and even later translated into English in 1925 as The Mentality of Apes.[3]

Although I am not in the field, my impression in a brief review is that this kind of work with monkeys and apes was strikingly new at the time,[4] and that Köhler's work is perhaps better known (and considered "first", although this may be complex to unpack). Yet the specific form of the monkey-box-banana intelligence test seems to actually trace to Yerkes' work in 1915, and in particular the "Box Stacking Experiment" test from The Mental Life of Monkeys and Apes: A Study of Ideational Behavior[1]. This involved a cage with bananas suspended from the ceiling and two boxes -- one large, one small -- where the ape subject (a Bornean orangutan named Julius) had to not just move one box, but stack the two boxes in order to reach the bananas.

One of the fascinating things about the writeup by Yerkes (p88-99) is that it documents both the many attempts made by Julius and a brief comparison test done on a 3-year old human child (who failed to reach the bananas). It makes fascinating reading because there is so much going on in the testing other than deducing a correct sequence of steps manipulating the physical environment, including (especially) communication and modeling behavior. For example, it is clear that the human child and the orangutan both 'ideate' a solution to the problem fairly quickly, and it is a social solution: they each lead the tall scientist-observer by the hand to the middle of the cage and request that the observer retrieve the bananas. Julius additionally requests that the observer stand still and serve as a ladder. The observer notes these requests, but declines -- and declines to record them as correct ideational behavior.

Yerkes includes numerous photographic plates and diagrams. In addition, undated videos attributed to Köhler's monkeys and apes are floating around on YouTube[5], and these clips showcase cooperation (as well as other related tests) -- although I haven't been able to date the videos, nor confirm that they are not from Yerkes or indeed someone else.

So this question, "(how) can an intelligence perform a multistage task?" does have a history that would make code performance with Protosynthex III a kind of simplified historical reenactment.

Yerkes, Robert Mearns. The Mental Life of Monkeys and Apes: A Study of Ideational Behavior. United Kingdom, H. Holt, 1916.

PDF: https://www.google.com/books/edition/The_Mental_Life_of_Monkeys_and_Apes

Plaintext: http://www.gutenberg.org/cache/epub/10843/pg10843-images.html ↩︎ ↩︎

Wolfgang Köhler. Intelligenzprüfungen an Anthropoiden, Berlin, 1917. ↩︎

Ruiz, Gabriel, and Natividad Sánchez. "Wolfgang Köhler’s the Mentality of Apes and the Animal Psychology of his Time." The Spanish journal of psychology 17 (2014). e69, 1–25. ↩︎

Frithjof Nungesser in "Mead Meets Tomasello" (The Timeliness of George Herbert Mead) (2015) p258, 270: "From an evolutionary perspective, it may appear odd that there are hardly any primates in Mead's argument. After all, already Darwin emphasized the extraordinary similarities between human and nonhuman primates. Yet, one has to keep in mind that the first pertinent psychological studies of monkeys and apes were published in 1916 and 1917, respectively." ↩︎

The footage attributed to Köhler is also found excerpted in TV specials and documentaries online, e.g. youtu.be/6-YWrPzsmEE?t=63 ↩︎

Thanks @markcmarino!

I was only able to get hold of the first page of Prior, and only a review of von Wright - no monkey mentions in either from what I could see. I was however able to find a copy of Freudenthal, which stood out from the other two because it describes the LINCOS international (or should I say interplanetary) auxiliary language. This is a language designed to be radio transmitted and understood by alien species. The first transmission is supposed to start off by indicating numbers, then arithmetic, and then increasingly complex types of logic. However, there are no references to monkeys in the book cited. I was able to ask an older faculty member in the Computer Science department if they had ever come across a 'monkey theme' in logic examples, but no luck there either.

Thanks @Temkin! I was hoping to find a list of microworlds to see if any remind me of animal experimentation, but I haven't come across one yet.

On Twitter, James Ryan also associated 'There is a Monkey' with microworlds:

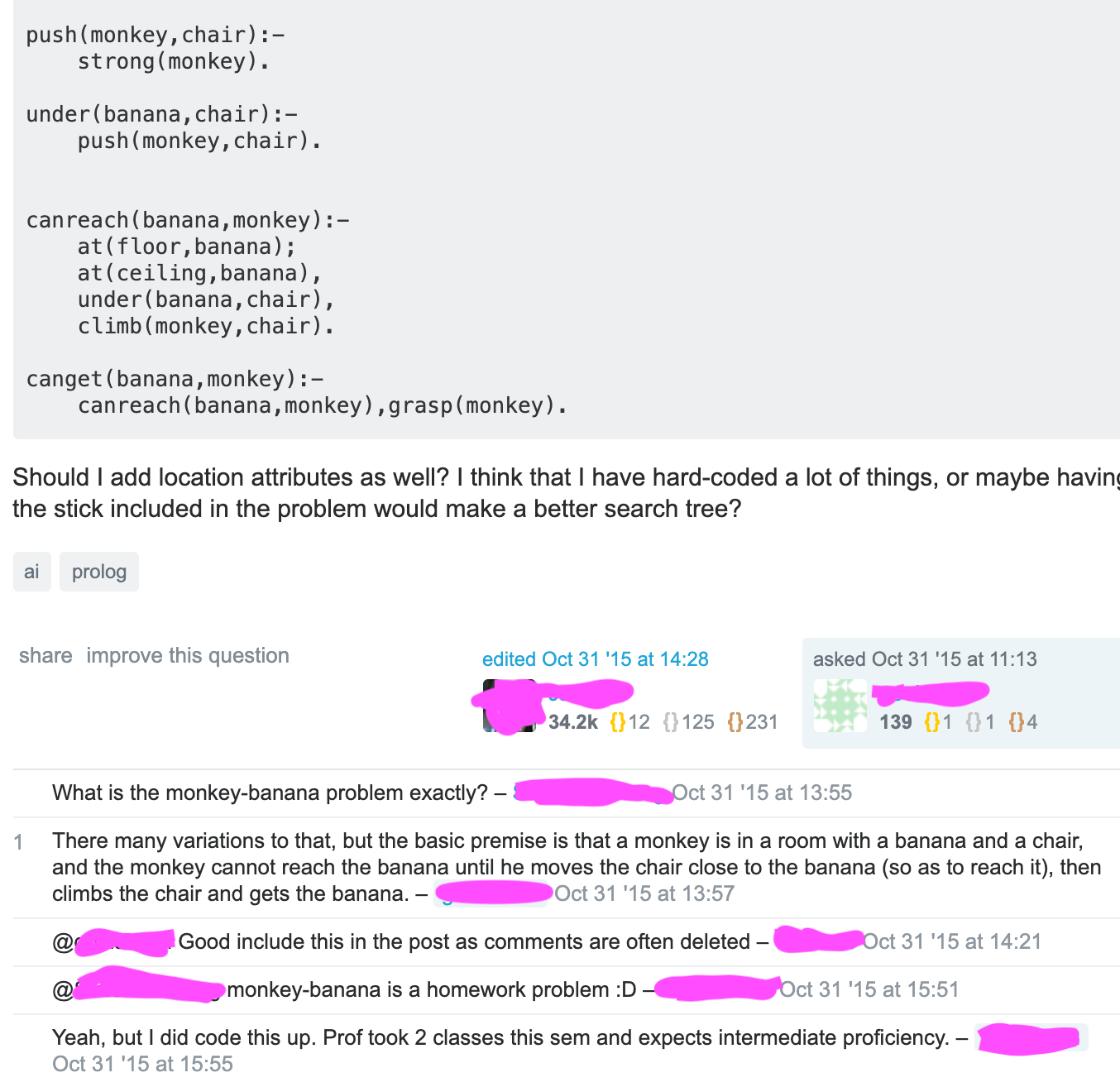

I'm more familiar with Prolog than LISP. Prolog was also a popular AI programming language, although it was not developed until 1972. I searched for 'prolog monkey' in a search engine and got several results back with phrases along the lines of "monkey-banana problem" from Stack Overflow and other code snippet and help sites.

https://duckduckgo.com/?q=Prolog+monkey&atb=v165-1&ia=web

For example, on this particular Stack Exchange thread it is referred to as The monkey-banana problem, and also a monkey and banana problem. It is also referred to as a homework problem, which could indicate that the concept/scenario has become somewhat typical or standard:

I did also happen to find a paper titled 'Decision Theory and Artificial Intelligence II: The Hungry Monkey', by Feldman and Sproull, 1977. Here, the monkey and banana problem is presented as already being an established theme in early AI. If the uncited statement below is accurate, then it may suggest that by the late 1970s monkey and banana scenarios could have become a convention in AI programming examples:

"The central problem addressed in this paper is that of planning and acting. This is a core issue in AI and becomes increasingly important as we begin to apply AI techniques to real problems. The vehicle used for discussion in this paper is the classic "Monkey and Bananas" problem. It is a simple, well-known problem which can be extended naturally to include many of the basic issues we wish to address." pp. 159-160.

Wow, thanks for this very in-depth find and reading, @jeremydouglass! The detail you mention about the observer declining to record social solutions as correct behavior really highlights the fact that they do not have total control over the situation, despite wanting to play out their own specific version of it. It almost seems as if the observer actually needs the Protosynthex III version of the scenario, where the world is artificially empty, social solutions are very literally omitted, and control is total.