Critical Code Studies Workbench

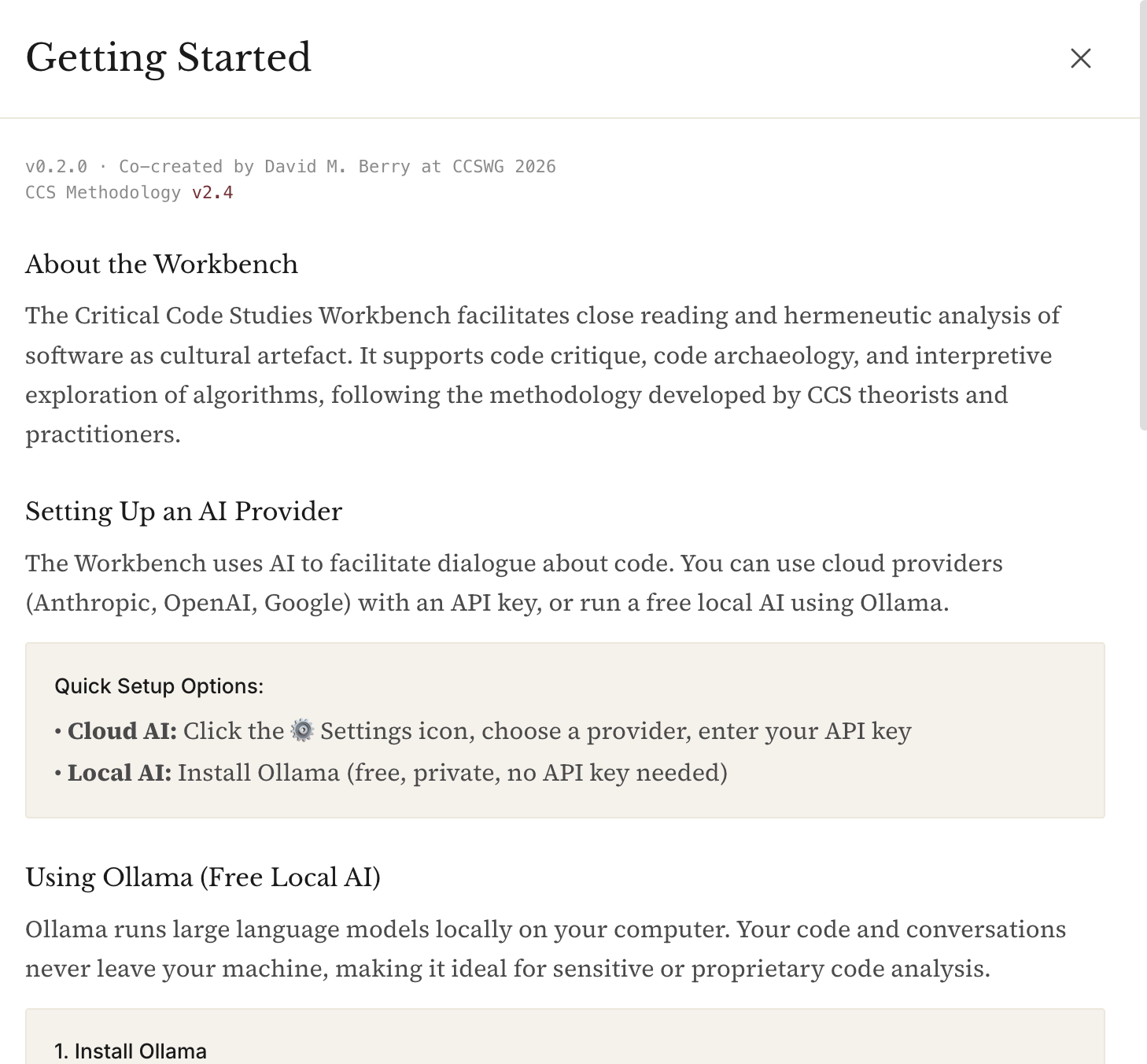

Inspired by the theme of vibe coding this week, I decided to try to actualise a workbench for critical code studies projects. I used Claude Code to do the heavy lifting and I now have a usable version that can be downloaded and run on your computer. This is version 1.0, so things may not always work correctly, but I think it offers potential for CCS work: it democratises access to the methods and approaches of CCS and makes for a (potentially) powerful teaching tool.

It uses a local LLM through Ollama, which you will need to download and install, but that's pretty easy. You should (!) also be able to use an API key to talk to a more powerful LLM, if you want.

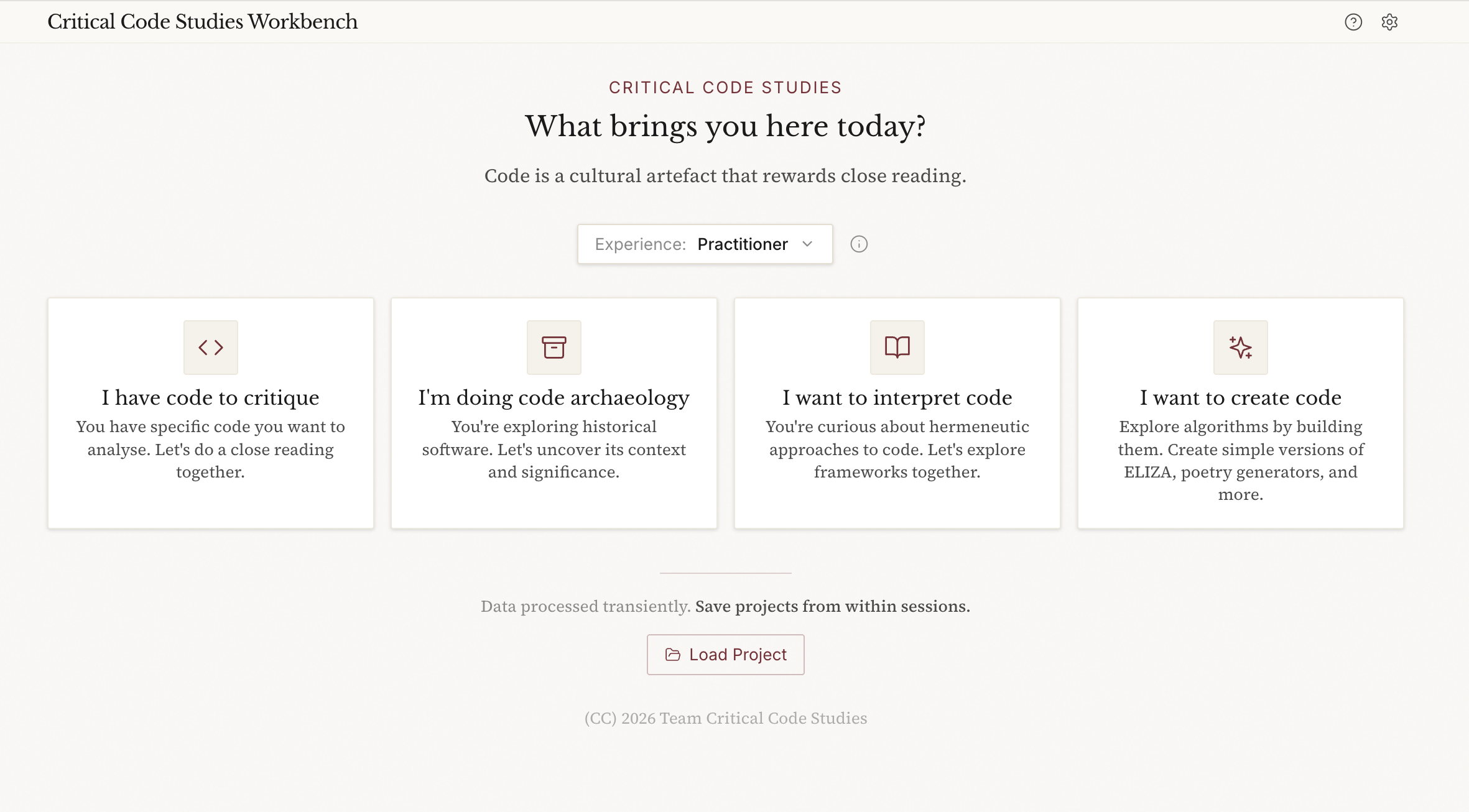

This is how the main page looks:

The Critical Code Studies Workbench facilitates rigorous interpretation of code through the lens of critical code studies methodology. It supports:

- Code critique - Close reading, annotation, and interpretation in the Marino tradition

- Hermeneutic analysis - Navigating the triadic structure of human intention, computational generation, and executable code

- Code archaeology - Analysing historical software in its original context

- Vibe coding - Creating code to understand algorithms through building

Software deserves close reading, and here is a tool to help us. The Workbench helps scholars engage with code as meaningful text.

Note that the CCS Workbench has a built-in LLM facility. This means that you can chat to the LLM whilst annotating code, ask it to help with suggestions, give interpretations of the code, etc. whilst you are working. The other modes are more conversational (archaeology/interpretation/create) and allow a more fluid way of working with code and ideas. There is a quite sophisticated search for references whilst you are chatting in the latter three modes so you can connect to CCS literature (and wider) from within the tool.

The Workbench saves project files based on the mode you are in. You can open them from the main page and it will put you back in the session where you left off. You can also export the session in JSON/Text/PDF for writing up in an academic paper, etc.

Features

Entry Modes

- I have code to critique: IDE-style three-panel layout for close reading with inline annotations

- I'm doing code archaeology: Exploring historical software with attention to context

- I want to interpret code: Developing hermeneutic frameworks and approaches

- I want to create code: Explore algorithms by building them (vibe coding)

Experience Levels

The assistant adapts its engagement style based on your experience:

- Learning: Explains CCS concepts, offers scaffolding, suggests readings

- Practitioner: Uses vocabulary freely, focuses on analysis

- Research: Engages as peer, challenges interpretations, technical depth

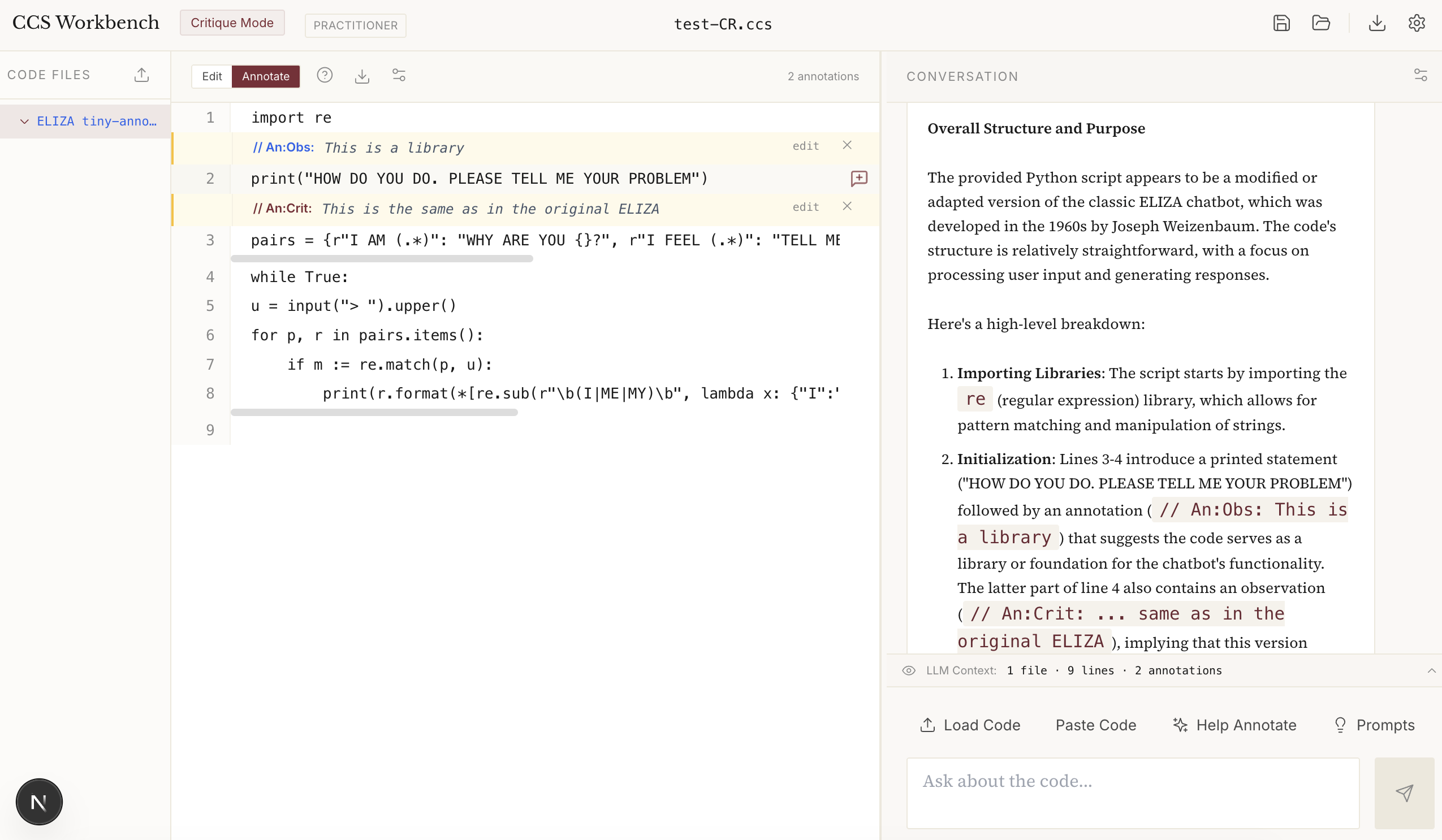

IDE-Style Critique Layout

The critique mode features a three-panel layout for focused code analysis:

Left panel: File tree with colour-coded filenames by type

- Blue: Code files (Python, JavaScript, etc.)

- Orange: Web files (HTML, CSS, JSX)

- Green: Data files (JSON, YAML, XML)

- Amber: Shell scripts

- Grey: Text and other files
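As a rough illustration of the colour coding above, here is a minimal Python sketch of how a filename could be mapped to a colour group. Only the five colour groups come from the post; the specific extensions, the table name and the function name are assumptions, and the Workbench itself may do this quite differently.

```python
from pathlib import Path

# Hypothetical extension-to-colour table. The colour groups are from the post;
# the exact extensions listed here are assumptions for illustration.
COLOUR_BY_EXTENSION = {
    ".py": "blue", ".js": "blue",                          # code files
    ".html": "orange", ".css": "orange", ".jsx": "orange",  # web files
    ".json": "green", ".yaml": "green", ".yml": "green", ".xml": "green",  # data
    ".sh": "amber",                                        # shell scripts
}


def colour_for(filename: str) -> str:
    """Return the colour group for a filename, falling back to grey."""
    return COLOUR_BY_EXTENSION.get(Path(filename).suffix.lower(), "grey")


if __name__ == "__main__":
    for name in ("eliza.py", "index.html", "config.yaml", "run.sh", "NOTES.txt"):
        print(name, "->", colour_for(name))
```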

Centre panel: Code editor with line numbers

- Toggle between Edit and Annotate modes

- Click any line to add an annotation

- Six annotation types: Observation, Question, Metaphor, Pattern, Context, Critique

- Annotations display inline as `// An:Type: content`

- Download annotated code with annotations preserved

- Customisable font size and display settings
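If you download annotated code and want to pull the annotations back out for a write-up, something like the following Python sketch might work. It assumes each annotation sits on its own line in the `// An:Type: content` form and uses the six annotation types listed above; the names `Annotation` and `extract_annotations` are hypothetical and not part of the Workbench.

```python
import re
from typing import NamedTuple

# The six annotation types named in the post.
ANNOTATION_TYPES = ("Observation", "Question", "Metaphor", "Pattern", "Context", "Critique")

# Assumption: an annotation occupies a whole line, e.g. "// An:Question: why GOTO?"
ANNOTATION_RE = re.compile(r"^\s*//\s*An:(" + "|".join(ANNOTATION_TYPES) + r"):\s*(.*)$")


class Annotation(NamedTuple):
    line_number: int  # 1-based line number in the annotated file
    kind: str         # one of ANNOTATION_TYPES
    content: str      # the annotation text


def extract_annotations(annotated_source: str) -> list[Annotation]:
    """Collect every annotation line found in the annotated source text."""
    found = []
    for number, line in enumerate(annotated_source.splitlines(), start=1):
        match = ANNOTATION_RE.match(line)
        if match:
            found.append(Annotation(number, match.group(1), match.group(2).strip()))
    return found


sample = """10 PRINT "HELLO"
// An:Observation: greeting is hard-coded in upper case
20 GOTO 10
// An:Critique: the loop never yields control to the user
"""

if __name__ == "__main__":
    for annotation in extract_annotations(sample):
        print(annotation)
```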

Right panel: Chat interface with guided prompts

- Context preview shows what the LLM sees

- Phase-appropriate questions guide analysis

- "Help Annotate" asks the LLM to suggest annotations

- Resizable panel divider (drag to resize)

- Customisable chat font size

Project Management

- Save/Load projects as `.ccs` files (JSON internally)

- Load Project button on landing page auto-detects mode

- Export session logs in JSON, Text, or PDF format for research documentation

- Session logs include metadata, annotated code, full conversation, and statistics

- Click filename in header to rename project
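Since the project files are JSON internally, mode auto-detection could in principle be as simple as reading one field. The Python sketch below shows the idea only: the `mode` key and the four mode names are assumptions made for illustration, not the actual `.ccs` schema.

```python
import json

# Hypothetical mode identifiers, loosely following the four entry modes above.
KNOWN_MODES = {"critique", "archaeology", "interpret", "create"}


def detect_mode(ccs_text: str) -> str:
    """Return the mode stored in a project file's JSON text.

    Assumes a top-level object with a "mode" key (a hypothetical field name).
    """
    project = json.loads(ccs_text)
    if not isinstance(project, dict):
        raise ValueError("project file must contain a JSON object")
    mode = project.get("mode")
    if mode not in KNOWN_MODES:
        raise ValueError(f"unrecognised or missing mode: {mode!r}")
    return mode


if __name__ == "__main__":
    example = '{"mode": "critique", "name": "eliza-reading", "annotations": []}'
    print(detect_mode(example))
```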

Download the software from https://github.com/dmberry/CCS-WB/blob/main/README.md

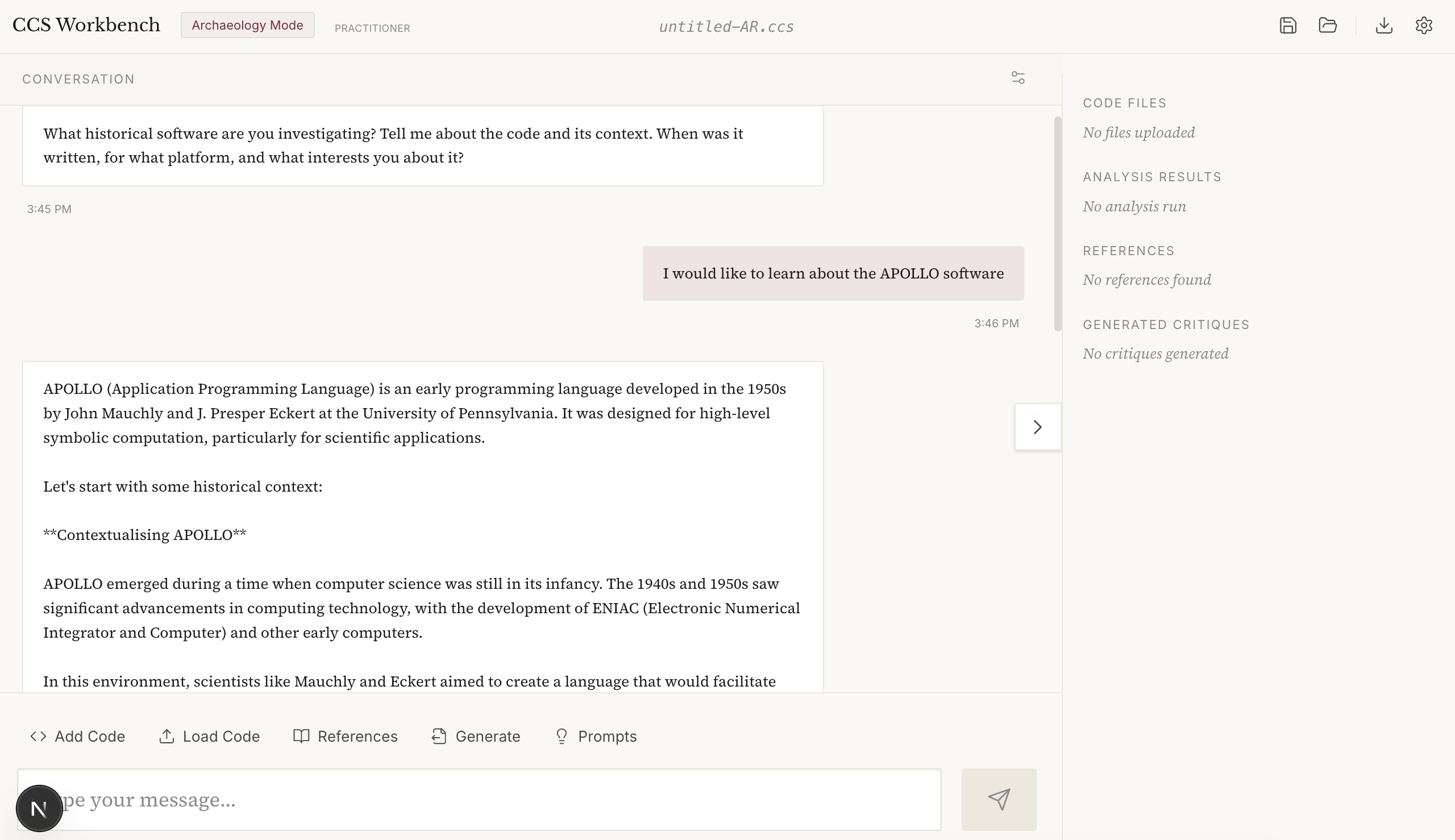

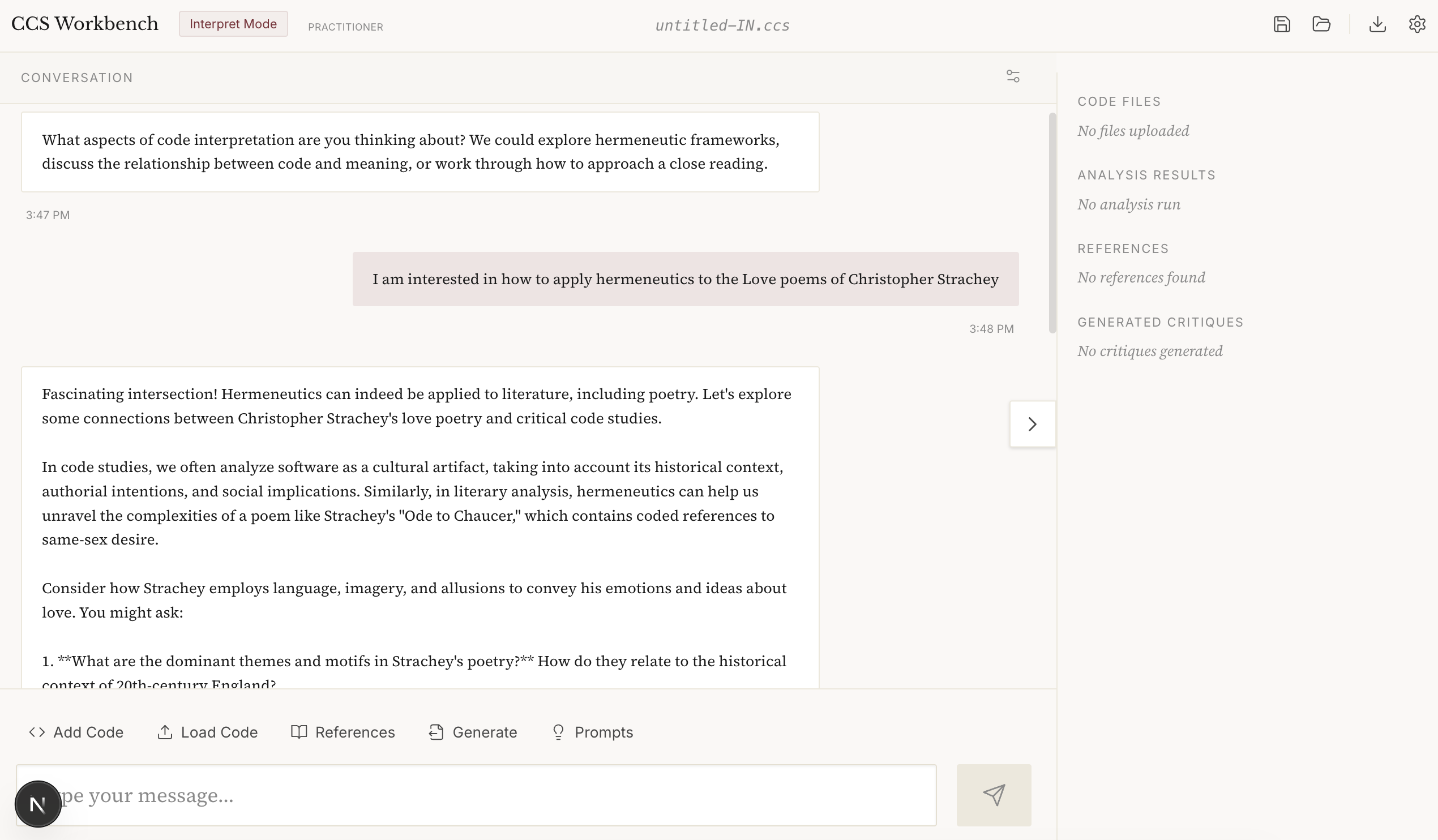

Here are some screenshots:

CCS Archaeology Mode

CCS Interpretation Mode (i.e. Hermeneutics)

CCS Create Code Mode (Vibe Coding)

See the help icon for more information (or the detailed instructions in the project README.md file).

Comments

Oh, very cool. This inspired me to this: https://claude.ai/share/083376a6-3648-40dd-a097-d8841259899d

@jshrager And what do you think of its reading?

I am glad that LLMs can reply to these sorts of prompts, personally. @jeremydouglass and I were just remarking on the advantages of these models having trained on texts of Critical Code Studies, though of course not always with permission.

Thinking back to Week 1, I wonder if we might get a little more out of choosing a particular critical lens for it to apply.

Maybe have it suggest some approaches from various schools of critical theory and then choose one for it to apply.

But that does make this interpretation business into a bit of a game when I think it usually begins with a question or even a hunch. A critical inquiry. A focus of reflection that gets crystalized when it hits an object of study.

For example, there are lots of critical approaches that reflect on identity (like the psychoanalytic approaches: Lacan, Irigaray -- not to forget a Rogerian approach!) or post-humanism (Hayles) or cyborg theories (Haraway). As you know from our book, I have read chatbots through the lens of gender performance (Butler). I know you also like theories from cognitive and neuroscience, so maybe try one of those.

In other words, the word hermeneutic might not be sufficient without pointing it to a particular hermeneutic approach.

If you ask the LLM, what parts of this code could be used in a critical code studies reading of this basic ELIZA in terms of (then choose one of those theories or theorists to fill in this blank) as I reflect on (fill in this blank with some philosophical or critical question), what does that yield?

@markcmarino Re: What I thought of Claude reading my code ...

This was sort of interesting:

"But here's the hermeneutic violence: the program assumes that merely swapping pronouns creates meaningful reflection. If you say "I FEEL you are wrong", it becomes "I FEEL I am wrong" - a grammatical transformation that may create psychological non-sense."

I love the term "hermeneutic violence"! :-) However, I feel that it's wrong that "I feel I am wrong" is a nonsensical response to "I feel you are wrong". The speaker might have convinced the listener, or the listener might be musing over whether they actually feel they are wrong.

In creating the critical code studies workbench, I thought it would be useful to distill what a critical code studies approach/method would be into a "skill" for the LLM. This means I gave an LLM a collection of CCS texts to read and asked it to convert their contents into a knowledge skill (rather like knowledge elicitation for an Expert System) for the AI so that it could apply CCS in practice. This hones its ability to focus on the task within an expert domain, rather than just asking a vanilla LLM to apply a (random) CCS method. This dramatically changes the ability of an LLM to understand what you want, and suggests a good way to do it.

In the end this Skill file proved too large (particularly for Ollama), so I had to compact it rather dramatically into this version (note this is also designed for progressive loading of the right skillset for the right mode in the workbench).

But I think this is still a useful distillation of the critical code studies methodology and you can paste it into an LLM to "teach" it how to do a CCS reading to preload it, as it were.

The full Critical Code Studies LLM Skill is here

The idea that you can take a collection of books and papers and convert it into a "skill" for an AI is super interesting. I think it is also suggestive of how CCS can operationalise its approach and method, particularly for teaching and research, where it can be difficult to understand how it is meant to function.

Of course, this skill is also extendable so that multiple approaches to CCS can be "taught" to it via an LLM (or hand edited by a human). I can imagine a number of improvements that could be made to this 1.0 version of the CCS skill – but this would also require compaction as current LLMs have a limited context window (250k for Claude, 1 million for Gemini) and the bigger this file gets the more tokens are used in parsing it by the LLM – hence the compaction I referred to earlier for the CCS workbench (as Ollama has a much smaller context window, 128K tokens).
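To get a feel for the trade-off, here is a back-of-envelope Python sketch for checking whether a skill file would fit in a given context window. It uses the common rule of thumb of roughly four characters per token for English text, which is only an approximation (real tokenisers vary by model), and the function names and the 600,000-character stand-in file are made up for illustration.

```python
import math

# Rough rule of thumb for English prose; an assumption, not a real tokeniser.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits(text: str, context_window: int, reserved_for_chat: int = 0) -> bool:
    """True if the text plus a reserved chat budget fits in the context window."""
    return estimate_tokens(text) + reserved_for_chat <= context_window


# Stand-in for a 600,000-character skill file (hypothetical size).
skill = "x" * 600_000

if __name__ == "__main__":
    print(estimate_tokens(skill))     # about 150,000 tokens under this heuristic
    print(fits(skill, 128_000))       # the smaller window quoted above
    print(fits(skill, 1_000_000))     # the larger window quoted above
```

Reserving some of the window for the conversation itself (the `reserved_for_chat` argument) matters in practice, since the skill, the code under study and the chat history all share the same budget.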

Hi @jshrager Is Claude's interpretation correct? Wouldn't the program respond to "I FEEL you are wrong" with only one of these?

"DOES THAT TROUBLE YOU?"

"TELL ME MORE ABOUT SUCH FEELINGS."

"DO YOU OFTEN FEEL I AM WRONG"

"DO YOU ENJOY FEELING I AM WRONG"

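For readers who want to see the mechanics behind those candidate responses, here is a minimal Python sketch of an ELIZA-style keyword rule. It is not the program under discussion: the pronoun table, the two reassembly templates and the fallback reply are assumptions chosen to reproduce the responses listed above. The point it illustrates is that the reflected fragment is embedded in a question, rather than echoed back as "I FEEL I am wrong".

```python
import re

# Hypothetical word-level reflection table; a real ELIZA script defines its own.
REFLECTIONS = {
    "I": "YOU", "ME": "YOU", "MY": "YOUR", "AM": "ARE",
    "YOU": "I", "YOUR": "MY", "ARE": "AM",
}

# Two of the reassembly templates suggested by the responses listed above.
REASSEMBLIES = [
    "DO YOU OFTEN FEEL {0}",
    "DO YOU ENJOY FEELING {0}",
]


def reflect(fragment: str) -> str:
    """Swap first- and second-person words, one word at a time."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.upper().split())


def respond(user_input: str, choice: int = 0) -> str:
    """Apply the 'I FEEL' rule; otherwise return a hypothetical fallback reply."""
    match = re.match(r"\s*I FEEL\s+(.*)", user_input, re.IGNORECASE)
    if not match:
        return "PLEASE GO ON."
    template = REASSEMBLIES[choice % len(REASSEMBLIES)]
    return template.format(reflect(match.group(1)))


if __name__ == "__main__":
    print(respond("I FEEL you are wrong"))
    print(respond("I FEEL you are wrong", choice=1))
```
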
@anthony_hay I don't know. I'd have to run the program to find out exactly, but I don't think that this matters much. If you and I were talking about this sort of thing, I wouldn't expect you to be able to generate exactly correct examples to make this sort of point. In fact, it's this precise sort of flexibility that makes LLMs so useful, and at the same time so frustrating. Often we want some flexibility -- creativity, if you like -- say in envisioning the UI of something you're building, but when it comes time to implement, we want to drill down and get the details right -- a process sometimes called "cognitive zoom". LLMs have no capability to engage in cognitive zoom, so they treat programming the same way they treat poetry. As a result, possibly 2/3rds of the interactions I have had over several years of AI-assisted coding involve getting the system to find stupid mistakes that no real person would make, like not balancing their divs. (To be fair, a real person might make such a mistake, possibly more often than an LLM if left to their own devices. But "real" engineers know that this sort of thing is important and so we have specific machinery built into our IDEs that does this sort of thing for us. This is the core of the problem; LLMs depend on fancy statistical processes for EVERYTHING. Now, again, to be fair, the AI folks realize this, but unfortunately, aside from paren balancing and a couple of other things, like arithmetic, it has been previously demonstrated (actually not, but that's a separate thread) ... it is believed, let's say, that we can't build such "expert systems" for complex tasks. So what LLMs have basically done is to reduce AI to a previously unsolved problem.)