CCSWG 2024 is coordinated by Lyr Colin (USC), Andrea Kim (USC), Elea Zhong (USC), Zachary Mann (USC), Jeremy Douglass (UCSB), and Mark C. Marino (USC) . Sponsored by the Humanities and Critical Code Studies Lab (USC), and the Digital Arts and Humanities Commons (UCSB).

Sexist Autocomplete: Interventions?

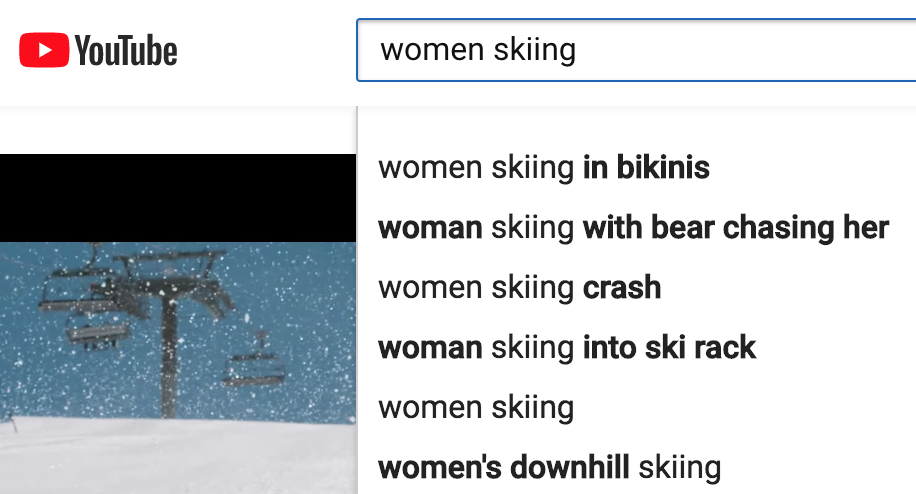

I searched "women skiing" but autocomplete serves up women as sex objects and being humiliated for the first four returns. Who is writing these algorithms? Sexism is invisibly baked into so much of our virtual world.

This prompts me to think about the code we can't see, the invisible rules that validate sexism by reinscribing it.

Comments

I'm thinking also about Lauren Klein's MLA 2018 talk "Distant Reading After Moretti." In this talk Lauren observes of distant reading and topic modeling:

I suppose what I want to suggest in this discussion about gender and programming culture is that the interventions should happen in code and output. But how to intervene in the programming culture that fundamentally conceives of women's achievement as humiliation?

Thanks Kathi,

I totally agree with you and with Lauren in posing these questions about invisibility, intervention, and women's presence in the history of computing.

I'm actually working on a project involving Ada Lovelace and Mary Somerville right now, revolving around the question of materialism and feminism in nineteenth-century media archaeology. The interesting question for me with these two figures is the way they have been made invisible for over a century, yet remain two of the most important people contributing to our knowledge in STEM.

Somerville, for instance, wrote (among other things) ON THE CONNEXION OF THE PHYSICAL SCIENCES. Published in 1834, it serves as a textbook and a review of the theories of "men of science" like Newton, Galileo, etc. Somerville is not seen as one of the major figures of science like Charles Darwin or William Herschel, yet her book went into ten editions, was translated into three different languages, and, for publisher John Murray, was second only to Charles Darwin's ON THE ORIGIN OF SPECIES in the number of copies sold.

Now, Somerville isn't known for inventing her own scientific theories. She's mostly known for popularizing the theories of other people (mostly men). Her first publication was a set of annotations for a translation of Pierre-Simon Laplace's MECHANISM OF THE HEAVENS. Ironically, Ada Lovelace's only major publication was also a set of annotations for a translation of a work by Luigi Menabrea on Charles Babbage's Analytical Engine.

In both cases, I think it is interesting to see how 19th/20th century theories of authorship effectively obscured how women contributed to (and, in Somerville and Lovelace's example, actually created) the technological and scientific world of the 20th century. Somerville has a take on Newton that wards off the "occult forces" of his theory of gravity for a more materialist understanding that actually foreshadows Einstein's theory of general relativity. Lovelace, of course, creates the first published computer program as this "aside" buried in one of the notes to Menabrea's essay. Even in print culture, there are so many places that we can't see, where women have been silenced.

So, what does it mean to say (as I do) that Lovelace and Somerville should be celebrated more in the history of STEM? In this context, I'm finding the questioning of traditional theories of agency and authorship by new materialist feminists to be productive. For instance, Peta Hinton and Iris van der Tuin suggest that we look at feminism not as a homogeneous movement where 'progress' is understood in a linear history punctuated by figures who acted individually as agents of change, but instead by acknowledging all of the ways women are embedded in these assemblages of power and infrastructure. There are methods of exclusion embedded in the process of understanding these assemblages as well (who is a woman? who is a feminist? what constitutes progress? who was a programmer? where is care-work to be situated?). But these questions of exclusion extend to every intervention made in the name of feminism, including the writing of these very comments. The way we describe feminism, as well as the algorithmic 'baking' that Kathi mentions above: all of these delineate what we can see when we look for feminism.

Indeed we should ask "who is writing these algorithms?" But that question also opens up this broader question about what it is we expect when we make an intervention. How do we understand the agency of feminism and what would constitute progress in these questions? Particularly when we are talking about countless numbers of texts, the way we situate the question of gender and programming seems really important.

@KIBerens I just tried "woman skier", and the results were not much better. Yikes! However, "woman skier FIS" (Fédération Internationale de Ski) returned what I was looking for. This suggests that precise searching is helpful in sexist systems, a strategy that is in line with Howard Rheingold's suggestions in Net Smart.

But I agree with the larger goal you suggest -- that intervention and advocacy are needed to reform such systems!

Advocacy should start within the box before moving outside it, because knowing who they are and what their strengths are will go a longer way than fighting for them.

@JudyMalloy your mention of trying the result for yourself makes me think about how these results are customized based on not only algorithms we can't see, but algorithms parsing our data bodies that we can't see or edit. Yours and @KIBerens and mine might well look totally different--but probably will still have the same fundamental assumptions.

However, the suggestion of search precision as a workaround also makes me think of the work one of our colleagues this week, Safiya Noble, has done about what the default search looks like (in her case, for terms like "black girls") and how that in some ways constitutes the social meaning of a term for somebody like a tween looking for information about herself. How do we intervene for the least experienced users as well?

@melstanfill As you point out, results of searches are increasingly algorithmically tied to past searches as well as to the data the search engine has stored about you, including assumptions about your gender or race.

There are academic issues with search results that are influenced by personal search history or personal information. Safiya Noble's work sounds important. When available, it would be great to see this!

Per @rogerwhitson on authorship, which I'm quoting below:

In both cases, I think it is interesting to see how 19th/20th century theories of authorship effectively obscured how women contributed to (and, in Somerville and Lovelace's example, actually created) the technological and scientific world of the 20th century. Somerville has a take on Newton that wards off the "occult forces" of his theory of gravity for a more materialist understanding that actually foreshadows Einstein's theory of general relativity. Lovelace, of course, creates the first published computer program as this "aside" buried in one of the notes to Menabrea's essay. Even in print culture, there are so many places that we can't see, where women have been silenced.

From working in art history, I'm curious about the parallels with the authorial roles given to the critic or public intellectual, roles in which Somerville in particular seems to have been involved. Yes, as you point out, there are various ways in which women's contributions to the history of science have been silenced. I'm curious why, in the 19th century, Somerville would have been able to contribute in one role (as a critic, historian, public intellectual, etc.) while her other contributions went unseen. So, while one route was silenced, perhaps another productive route for your research might be to understand their historical contributions through the quite-small spaces in which they could speak?

I'm excited to see how this discussion and @rogerwhitson's research develops.

Hi Kathi,

I've wanted to respond to your post in this thread since the weekend. Thought I'd wait until the official start of the working group today.

From @KIBerens:

I'm interested in homing in on your suggestion of interventions in both code and output. Both are highly interrelated, of course, and it is difficult to place oneself at one end or the other. I'm curious about how we can place ourselves and our interventions into this system of inputs and outputs, and between and among the two. Yet so much of the algorithmic "iceberg" remains a black box -- we don't have access to the code, and we have few means to provide feedback on the code itself. Thanks, Google.

Another link for those interested in this thread: take a look at Google's current "Autocomplete Policies." I'm going to include a screenshot below because who knows how long these policies will remain active and unchanged.

It's easy to see that these four categories exclude much in the way of everyday forms of hateful speech. From a standpoint of "code_and_output," though, I'm attuned to the issue of the public feedback mechanisms in place. It's up to an individual to report autocomplete violations: any feedback we input to Google only occurs at the level of algorithmic output. We're not sure if our input will be taken seriously, who reads our input, or if, indeed, any changes made will affect an algorithm as a whole, or just "my" personal algorithm.

Kir, it is a nice move, but the graphic of violation against woman gender autocomplete policies should not be removed totally in the Google algorithm. The reason is that it helps to get statistics of the violence done against women in certain geographical areas. For example, in Nigeria, the government is not doing well in protecting us. Google autocomplete works only when the virtual community of an area searches tt engaged discussion on the net; when somebody wants to use Google search within that week, they will see the autocomplete popup making suggestions for the searcher. This is my observation.

I am encountering a similar issue in terms of searching for "images of black beauty." This search brings up only images of horses (black horses). However, if I search for "images of beauty," I get images of white women. So in the coding, beauty and race are not linked in terms of non-white women. I'm new to this discussion.

FemTechNet co-founder Alexandra Juhasz has done work on gender, sexuality, and race in the YouTube search algorithm and how the results it returns based on its programming might obscure or reveal parts of what she has called "NicheTube" (parts of YouTube occupied by powerful communities of potential counter publics). When looking for videos returned by the terms "angry professor" and "angry student" while researching _The War on Learning_, it was hard not to see racist or sexist agendas advanced by what was supposedly a neutral operation. Juhasz also talks about how the YouTube algorithm defines "popularity" in ways not always driven by obvious metrics like the number of views. I'm interested in how some ranking algorithms -- like the Facebook feed -- seem to be borrowing from slot machine scrambling techniques, perhaps to keep people online longer.

Totally agree that the "autocomplete policies should not be removed totally"; didn't mean to imply their removal.

For the rest of the thread participants, do you have any resources or links on how autocomplete has been used to help get statistics of violence against women? I'm intrigued, but not familiar with the method.

For sure this is true. Similar to the Netflix recommendation algorithm, part of what YouTube (and other content markets) are doing is profiling your interest in certain types of content. It's easy to default to

most popular = most number of views/hits

but in many cases may be something closer to

The queries used will most often change on each page load, and it's easy to forget that companies are A/B testing constantly (I always explain A/B tests like optometrists asking "better like this or better like this?", but without a user's permission or knowledge).

There's been a move among programmers (at least in my sphere) to switch from calling them "ranking algorithms" to "engagement algorithms." I think this makes it way clearer that systems are not always ranking content items by the same criteria, but are instead shuffling them like a deck of cards at each engagement. The gambling industry has taught us a lot about the value of unpredictable rewards in sustaining longer-term sessions and repeat visits.
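To make the "ranking" versus "engagement" distinction concrete, here is a minimal sketch. It is an illustration only and makes no claim about how YouTube or any other platform actually orders content. The catalog, the view counts, and the function names `rank_by_views` and `engagement_order` are all hypothetical, and the weighted shuffle is just one simple way to get an order that changes between page loads.

```python
import random

# Hypothetical catalog of (title, view_count); every value here is invented.
VIDEOS = [
    ("olympic downhill highlights", 900),
    ("ski crash compilation", 700),
    ("beginner ski lesson", 300),
    ("backcountry touring vlog", 100),
]


def rank_by_views(videos):
    """Deterministic ranking: the same order on every page load."""
    ordered = sorted(videos, key=lambda video: video[1], reverse=True)
    return [title for title, _ in ordered]


def engagement_order(videos, rng):
    """Weighted shuffle: popular items tend to come early, but the
    order can differ from one page load to the next."""
    pool = list(videos)
    ordered = []
    while pool:
        weights = [views for _, views in pool]
        # Draw one remaining item, with probability proportional to its views.
        pick = rng.choices(range(len(pool)), weights=weights, k=1)[0]
        ordered.append(pool.pop(pick)[0])
    return ordered
```

Calling `rank_by_views(VIDEOS)` always returns the same list, while calling `engagement_order(VIDEOS, random.Random())` several times will usually return different orders of the same items, which is the "deck of cards" behavior described above.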

Kir, there is a graph technology on Google called N-grams. You can use it to find out the most frequent words used in the virtual world and the most correct and popular expressions. It plots them as a graph for you to see.

I just wanted to suggest that while these exceptions are fascinating and worth concern, we should also look beyond the particular implementation of autocomplete, even with Google's interesting variations on it. When wondering why these results appear, there is the entire ecology of YouTube, the Web, and society to consider.

What types of videos are people encouraged to upload, algorithmically and otherwise, in order to get more subscriptions, views, and revenue? We just read about the case of a guy looking through a forest in Japan for a dead body so that he could get more attention on YouTube.

Why does an "autocomplete" feature need to be included at all? When I start to type my CCSWG comment, I do not get any suggestions about how to complete it, although it would of course be possible for the site to provide such suggestions. Why do I need any advice, or algorithmic advice, about what I'm looking for?

Not a new question, but why do we have a marked gender or "second sex" so that "woman skiing" means something different than "skier" in the society YouTube represents, or "woman driver" means something different than "driver"?

To shift to a different aspect of YouTube, its structure of categories: Many of the YouTube videos I view, and share, are recordings of poetry readings, but what category do these go into?

"Comedy"? "Entertainment"? In the case of skiing, "Sports" is relevant, but maybe the list of categories is part of what encourages people to upload light and derisive video fare, including that which pokes fun at women?

I also wonder: If CCSWG videos are uploaded to YouTube, what category would they be placed in?

I notice this also in the YouTube autocomplete. As soon as one types "Violence", these are the first autocomplete compound words that pop up:

The algorithm of the autocomplete favours women, for it helps in combating violence against women and girls. This is the reason I am saying that we should not totally remove violent words, for they help us to take the statistics of the violence done against women.

@lizlosh writes:

That's interesting. It probably works something like the aleatory marginalia phrases in the "Prologue" to From Ireland with Letters. If you go to any page -- https://people.well.com/user/jmalloy/from_Ireland/prologue_liam8.html -- for instance and press "alter the marginalia" (on the bottom left of the page) you'll see how the marginalia links are shuffled. (In this case it is not precisely a shuffle, since repetition is allowed.) Actually this works pretty well for allowing access to different parts of the narrative, but on a commercial platform, where control of content is not up to the user, there are some issues...
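The difference between a shuffle and a draw that allows repetition can be sketched in a few lines. This is only an illustration of the distinction, not the code behind From Ireland with Letters. The phrases in `PHRASES` and the function names are hypothetical stand-ins.

```python
import random

# Hypothetical marginalia phrases, invented for this example.
PHRASES = ["harp", "letters", "sea crossing", "studio", "archive"]


def shuffled(phrases, rng):
    """A true shuffle: every phrase appears exactly once, in a new order."""
    return rng.sample(phrases, k=len(phrases))


def drawn_with_repetition(phrases, rng):
    """Independent draws: a phrase may repeat, and another may be missing."""
    return rng.choices(phrases, k=len(phrases))
```

With `rng = random.Random()`, `shuffled(PHRASES, rng)` always contains all five phrases, while `drawn_with_repetition(PHRASES, rng)` may show the same phrase twice and leave another out, so some parts of a narrative could stay out of reach on a given load.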

For the sake of comparison:

Quite a difference. There's a mastery of the sport implied and, following a few of the top links, confirmed in the library of Olympic highlights and forecasts provided.

Meanwhile, "women skiing crash" turns up images of prone female Olympians...

Oh the ethics of that are fascinating: people believe they're ranking algorithms/the best results (at least in search, but possibly also elsewhere). This again raises the question I had above--we may know these things are designed to optimize advertising eyeballs, but the average user doesn't. In some ways it's a literacy question for people, but it's also a responsibility question for platforms.

-- you might want to look for Safiya Noble's forthcoming (like in two weeks) book Algorithms of Oppression, which talks about exactly the kinds of bias you and the group are discussing here. She's also got several smaller pieces out that can be a good place to start.

This is a great point, Nick. While the algorithmic bias is an important topic, in focusing on the idea of a single algorithm (not the case) we can create the false impression that it's just a small piece of code that needs to be modified, when it's actually the case that there's a full stack of design, programming, and interface that needs to be considered. Search term results are often "optimized" based on location, user demographics, user behavior and related 'similar user' behaviors -- we can't assume that what pops up on my screen is the return that everyone gets. In fact, I can't even be sure that on a laptop what returns is stable from location/time to location/time.

I really love your question - "why do I need any algorithmic advice?" -- we might want to make that a constant thread through our discussions over the next three weeks.

A large majority of the stack/code/design/interfaces have been and continue to be developed inside of and in support of violent and oppressive neocolonialism.

STEM programs and coding camps are by and large neoliberal recruiting tools that seek to unhinge participants from the commons as well as from historical and cultural realities.

Code that does not seek to bring about new equitable political and economic space for the oppressed is code that is in service to the status quo.

But you almost certainly are getting suggestions about how to complete your comment, in one way or another: your linguistic behavior on here is partially dependent on the particular affordances of the text box itself ("You can use Markdown in your post"), the structure of the forum software (e.g., replies are flattened, not threaded), the features provided by the underlying operating system (underlining misspelled words, silently correcting spelling and punctuation), the physical interface itself (the QWERTY layout of your keys).

If you're typing on a smart phone (w/o a physical keyboard) then this is even more aggressive and more closely related to search engine autocomplete: as I understand it most keyboard software is more or less guessing what word you intended based on a statistical model mapping your gestures to character/word likelihood. (And of course iOS offers the famed QuickType suggestions that have been deployed to great poetic effect.)

In other words, I don't think it's possible to avoid "algorithmic advice" (broadly construed) when writing on a computer: there's always someone else's procedure or data that shapes the way you convert your intention into text. (A point that works in the service of your larger point, I think, which is that it's worth considering search autocomplete as just one element in the larger ecology.)

I also want to consider the idea that autocomplete is, fundamentally, a kind of assistive technology: it makes it easier for people to type, which is ultimately a worthy goal, I think. So the question isn't how to minimize algorithmic intervention but how to make it more transparent and expressive.

In addition, because recommendation engines—including Google's search suggestions—work by identifying cohorts of people and common search patterns, unless coders modify the algorithms to include counter-suggestions that promote things like equity, diversity, etc., then the suggestions will just re-inscribe society's prejudices and power structures. In other words, we need activist algorithms.
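A toy sketch of that dynamic, under loud assumptions: the query log, the counts, the boost weights, and the function names `suggest` and `suggest_with_boost` are all invented for illustration, and nothing here describes how Google's suggestions actually work. The first function simply echoes the most frequent past queries; the second applies an explicit editorial weight before ranking, which is one possible reading of an "activist" adjustment.

```python
from collections import Counter

# Hypothetical query log; the strings and their frequencies are invented.
QUERY_LOG = [
    "women skiing fails", "women skiing fails", "women skiing fails",
    "women skiing olympics", "women skiing olympics",
    "women skiing technique",
]


def suggest(prefix, log, k=2):
    """Frequency-only suggestions: the most common past queries win."""
    counts = Counter(q for q in log if q.startswith(prefix))
    return [query for query, _ in counts.most_common(k)]


def suggest_with_boost(prefix, log, boosts, k=2):
    """Same counts, multiplied by an editorial weight per query
    (queries not listed in `boosts` keep a weight of 1.0)."""
    counts = Counter(q for q in log if q.startswith(prefix))
    scored = {q: n * boosts.get(q, 1.0) for q, n in counts.items()}
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scored, key=lambda q: (-scored[q], q))[:k]
```

With this log, `suggest("women skiing", QUERY_LOG)` leads with the "fails" query because it is the most frequent. Passing `boosts={"women skiing technique": 4.0, "women skiing fails": 0.5}` to `suggest_with_boost` moves "technique" and "olympics" to the top instead. Who chooses those weights, and how visibly, is exactly the question of transparency raised above.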

Thanks @KIBerens for framing this thought-provoking example.

Allison's important point about assistive technology inspires me to think about the ways algorithmic interventions can work like "an intervention" -- a watchfulness or mindfulness for another that is expressed as care. (Although note that in these cases one person's care may be another person's surveillance.) This is interesting in indirect cases such as the ones @Waliya suggests, where communities could be better served if we had more information on which violent / misogynist searches occur in which communities. It is also interesting in more direct cases, such as suicide risk analysis, when algorithms are attempting to predict the next thought or action of a person seeking help based on what they have written so far. From the linked feature:

Another thing I noticed in the GOOGLE algorithm: it is configured regionally to serve the desires of the people and the current data being input into the search engine. I want someone to type "violence" there in your country so that we can confirm this assertion.

Thanks Kir!

The interesting thing about these distinctions (public scholar, critic, etc.) is that they were very much in flux when Somerville was writing, so it is difficult to reconstruct what they meant to her. She was inducted as one of the two "honorary" female members of the Royal Astronomical Society with Caroline Herschel. But the membership was "honorary," not full, and she shared it. So.

But yes, thank you for the suggestion about "small" spaces of intervention. That's very important and another place I have to look.

Allison, you're right of course that any interface models and facilitates a particular type of input, and that this can be assistive, or in some cases not. (In this interface I get a squiggly red line indicating that "assistive" isn't a properly spelled English word.) In the case of a YouTube search interface, I want to suggest that the interface typically helps people regress toward the mean of stereotypes and find the sort of spectacles that make YouTube what it is.

There is probably no way to avoid algorithmic (or designed) advice, as you say, but we could use for some of it to be, as mwidner and jeremy have noted, thoughtfully constructed to be activist and interventionist.

I am using a Fero smartphone. I am also multilingual (French, English, Hausa, Margi and Spanish (beginner)). I did notice that the autocomplete algorithm does not suggest correctly for all languages. For example, if I am typing French on a mobile configured for English, the autocomplete malfunctions.

Thank you for this point! Autocomplete is part of a broader swath of interventions to make things easier that seem obviously superfluous and mockable to those who don't need them but actually serve a purpose for people with different abilities--whether physical (as in so many As Seen on TV products actually being for folks with disabilities) or technological (the young girl of color who has no idea that "black girls" is going to bring up nothing but porn).

Construed more widely, we could perhaps think of this at an epistemic level, even — what is not normative within an episteme tends to become illegible, and within apparatuses of knowledge production that rely on or leverage such things as "scale", this effect will tend to become more pronounced... So, there's a push towards normativity or standardization even in a purely epistemic sense, that is, even without any other incentivization.

I wrote something about this last year — here is the link in case it is of any interest to anyone: http://bit.ly/2ro58Vw .

This is now also making me think about when (mere) affordances end up becoming regulative. I like the term "assistivity" that was used in this thread. I am finding the term very useful to think about this. The threshold for that would seem to be different in different situations or technological environments (e.g. as noted in this thread, phones tend to have a stronger version of autocomplete) — the more tightly constrained the technology, the more the need for assistivity, and the more, correspondingly, the risk of assistivity turning into regulation.

I too am finding the term "assistivity" that has been discussed useful. I can't help thinking about assistivity as regulation, as discussed by @sayanb, in terms of assistive technologies performing a type of work or labor in our place. Assistive technologies provide narrower options as they become more and more limited, thus giving us the freedom to not be paralyzed by information and performing a type of administrative work as affective labor.

Further, I can't help but think of these forms of work (regulatory, administrative, affective) as quite gendered, both historically (the secretary, the switchboard operator, the flight attendant) and contemporarily in the form of automated voice. Automated voice assistants (Siri, Cortana, Alexa....) and the cold disembodied public service voices used to regulate and instruct (pre-Siri GPS, flight cockpit voices, public transportation system voices) largely employ female voices over male. Clifford Nass, Stanford University professor, suggests that female voices were first used as instruction in flight cockpits in WWII because the higher pitch stood out from the male voices of the pilot and crew. A female voice is preferred by Apple because it is believed to be perceived as friendlier and more personal than its automated male counterpart. But perhaps there is something more to this relationship between automated assistant and gendered work? Obviously, the female voice would not have stood out quite so much in the WWII cockpit if there had been real women involved in the first place.

https://www.cnn.com/2011/10/21/tech/innovation/female-computer-voices/index.html

@KIBerens writes to introduce this important thread:

Our own role in determining how our own websites are listed by search engines could be looked at. Sadly, as people with websites, our role in Search Engine Optimization is not as great as it used to be. But there are a few things we can do, and this is important for all of us who work with gender and race studies.

Because search engines change algorithms and tailor them to users, advice on this issue is difficult. But here is a relatively useful resource from a nonprofit marketing site:

"How to optimize a new nonprofit site for SEO"