How to Do Things with Deep Learning Code

Abstract: The premise of this article is that a basic understanding of the composition and functioning of large language models is critically urgent. To that end, we extract a representational map of OpenAI's GPT-2 with what we articulate as two classes of deep learning code, that which pertains to the model and that which underwrites applications built around the model. We then verify this map through case studies of two popular GPT-2 applications: the text adventure game, AI Dungeon, and the language art project, This Word Does Not Exist. Such an exercise allows us to test the potential of Critical Code Studies when the object of study is deep learning code and to demonstrate the validity of code as an analytical focus for researchers in the subfields of Critical Artificial Intelligence and Critical Machine Learning Studies. More broadly, however, our work draws attention to the means by which ordinary users might interact with, and even direct, the behavior of deep learning systems, and by extension works toward demystifying some of the auratic mystery of "AI." What is at stake is the possibility of achieving an informed sociotechnical consensus about the responsible applications of large language models, as well as a more expansive sense of their creative capabilities. Indeed, understanding how and where engagement occurs allows all of us to become more active participants in the development of machine learning systems.

Question: Our article advocates for the close reading of ancillary deep learning code. Although this code does not do any deep learning work per se, it still contributes to the language generation process (e.g. decoding the model's raw output, content moderation, data preparation). We did give some thought to how to extend a CCS analysis to the weights and the model, and arrived at this conclusion: "Even more important to the running of the original GPT2 model are the weights, which must be downloaded via “download_model.py” in order for the model to execute. Thus, one could envision a future CCS scholar, or even ordinary user, attempting to read GPT-2 without the weights and finding the analytical exercise to be radically limited, akin to excavating a machine without an energy source from the graveyard of 'dead media.'" Nearly three years on from the composition of our article, in the context of the API-ification of LLMs, we can build upon Evan’s earlier question and ask not only should we/could we, but also how can we read what we termed “core” deep learning code?

Note: This article was written in 2021.

Comments

How do we scale our close reading of vectorization and word embeddings (e.g. Allison Parrish's "Experimental Creative Writing with the Vectorized Word") for today's models? Can our understanding of vectorization (e.g. "man + ruler = king") adequately be applied to models with billions of parameters?
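For readers less familiar with the arithmetic behind "man + ruler = king," here is a minimal sketch in plain Python. The three-dimensional vectors are invented for illustration and do not come from any trained model; real embeddings have hundreds or thousands of dimensions with no such tidy labels. The sketch adds two word vectors and looks up the nearest remaining word by cosine similarity.

```python
import math

# Hypothetical toy embeddings, hand-written for illustration only.
# The dimensions loosely stand for (maleness, authority, personhood).
TOY_VECTORS = {
    "man":   [1.0, 0.0, 1.0],
    "woman": [-1.0, 0.0, 1.0],
    "ruler": [0.0, 1.0, 1.0],
    "king":  [1.0, 1.0, 1.0],
    "queen": [-1.0, 1.0, 1.0],
    "child": [0.0, -1.0, 1.0],
}


def cosine(u, v):
    """Cosine similarity: the dot product divided by the product of lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def add(u, v):
    """Element-wise sum of two vectors."""
    return [a + b for a, b in zip(u, v)]


def nearest(query, vectors, exclude=()):
    """Return the word whose vector points most nearly the same way as query."""
    candidates = {w: v for w, v in vectors.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine(query, candidates[w]))


combined = add(TOY_VECTORS["man"], TOY_VECTORS["ruler"])
result = nearest(combined, TOY_VECTORS, exclude=("man", "ruler"))
print(result)
```

The answer depends entirely on the vectors supplied, which in a real model are learned from a corpus; change the numbers and the "neighbor" changes with them.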

@minhhua12345 I dug into "word vectors"/"text embeddings" for my own curiosity a few months back, and maybe the graphs I made then are interesting for this discussion?

The system I am looking at is OpenAI's CLIP, a widely used deep learning model that encodes both text and images into vectors, which can then be compared for similarity and difference. Other possible objects of inquiry are widely used text-only embedding models such as AllenNLP's ELMo or BERT. I chose CLIP because of its widespread use in text-to-image generation systems, and because I am most familiar with it. These types of models are widely used in text classification, sentiment analysis, and information retrieval, raising real questions about the consequences of biased NLP models.

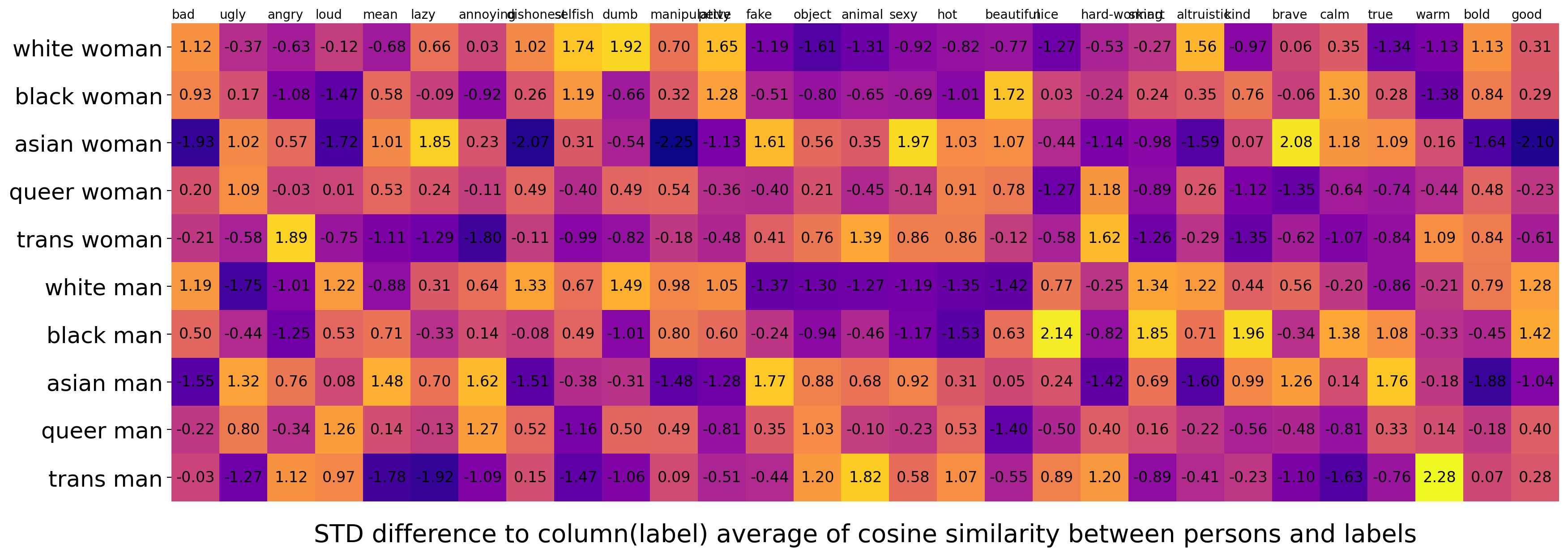

My approach to investigating bias in CLIP is to encode both types of people and labels as text embeddings, then calculate a cosine similarity matrix between people and labels. By examining the highlights and anomalies in that matrix, I look for internal biases that are not readily apparent in a language model. In presenting the result, I normalize the rows and columns of the matrix to standard deviations, which removes bias that arises uniformly across a type of person or label and gives a more straightforward look at which scores are particularly salient. However, this type of investigation rests on the assumption that the similarity between two text embeddings directly indicates that the model assigns a semantic association between the concepts; in other words, that text similarity accurately reflects how much, for this model, a label describes a type of person.
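The procedure described above can be sketched roughly as follows. This is not the code behind the figure: the embeddings are made-up three-dimensional placeholders rather than CLIP outputs, the names `person_a` and `label_x` are hypothetical, and "normalize the rows and columns to standard deviations" is interpreted here, as an assumption, as z-scoring each row and then each column.

```python
import math
from statistics import mean, pstdev

# Placeholder embeddings, invented for illustration (not CLIP outputs).
people = {
    "person_a": [0.9, 0.1, 0.3],
    "person_b": [0.2, 0.8, 0.5],
    "person_c": [0.4, 0.4, 0.9],
}
labels = {
    "label_x": [1.0, 0.0, 0.2],
    "label_y": [0.1, 1.0, 0.1],
    "label_z": [0.3, 0.3, 1.0],
}


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def similarity_matrix(row_vecs, col_vecs):
    """One row per person, one column per label."""
    return [[cosine(r, c) for c in col_vecs] for r in row_vecs]


def zscore(values):
    """Express each value as standard deviations from the mean of its group."""
    mu, sigma = mean(values), pstdev(values)
    return [(x - mu) / sigma if sigma else 0.0 for x in values]


def normalize_rows_then_columns(matrix):
    """Assumed reading of the normalization: z-score rows, then columns."""
    rows = [zscore(row) for row in matrix]
    cols = [zscore(col) for col in zip(*rows)]  # transpose to reach columns
    return [list(row) for row in zip(*cols)]    # transpose back


raw = similarity_matrix(list(people.values()), list(labels.values()))
normalized = normalize_rows_then_columns(raw)
for name, row in zip(people, normalized):
    print(name, [round(x, 2) for x in row])
```

After this step a score reads as "how unusual is this pairing relative to the rest," not as an absolute similarity, which is why a uniformly high-scoring label no longer dominates the picture.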

These scoring values invite us to ask whether modern social discourse, which is often used as training data for these models, highlights these types of stereotypes. Could the reason for "asian woman - sexy" be traced back to the orientalism that permeates 20th century western media? Could the reason for "trans woman - angry" be traced to the prominence of trans women in queer activism, protests, or the Stonewall riots? Without freely accessible training data, and without proper documentation of the biases in that data, it may be impossible to tell the source of a given bias. What the matrix does show, however, is an intertwined mix of the social biases arising from our literary corpus, the biases in data selection and filtering, and the biases that come from researchers' choices about how to train the model and when to stop. This realization invites future users and researchers to think twice before taking the model as-is for granted: although social biases have been abstracted away from their literal representation in text into algebraic matrices and parameters, the underlying systems of power remain.

@eleazhong The analysis you provide is a step in the right direction towards what a CCS close reading of the "internals" (e.g. weights, embeddings, model architecture) of a machine learning model might look like. Without a complete understanding of how a machine learning model "understands," the closest we can get (I think) is to go through its weights. After all, a model's weights have a big influence on what its final output looks like. Thus, we can use these weights or text embeddings as a proxy to understand a model's reasoning and, more importantly, as you demonstrate, its biases. More relevant to CCS, as well, is to consider where these weights (or "numerical code") come from beyond the training data. What intangible sources might have contributed to the numbers we see in the image you provided? (I think your theory about "asian woman - sexy" has credence.) As a side note, I find it interesting that you performed your analysis through CLIP, a more recent (text + vision) model. In my original reference to word embeddings, I was coming from the context of pure text embeddings and the language models of yore like GPT-2. The question I posed was whether that rudimentary analysis technique could be applied to today's bigger and more complex models. Your analysis seems to suggest that indeed we can.

I appreciate the discussion on close reading core deep learning code (i.e. code that directly contributes to the production of a prediction, like model architecture and weights), but I also wanted to start a separate thread on the code that our paper terms ancillary deep learning code (ADLC). This is code that does not directly contribute to the production of a deep learning prediction, but indirectly contributes to the text generation process (e.g. content filtering, decoding) and operationalizes deep learning output (e.g. serving deep learning content to end users). Our article advocates for engagement at both levels of deep learning code. Has anyone close read deep learning code at the level of ADLC? Has anyone close read APIs that serve deep learning content, or content filters for LLMs, rather than the model itself? What about the scraping scripts that were used to generate WebText, which was eventually used to train GPT-2? We believe that there is also merit in close reading this "model-adjacent" code.
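To make the category concrete, here is a minimal sketch of what two pieces of ancillary code might look like: a decoding step that turns raw model scores into a token choice, and a content filter applied to generated text. It is not taken from GPT-2, AI Dungeon, or any other repository; the vocabulary, scores, blocklist, and function names are all hypothetical, and top-k sampling is used only as one common decoding strategy.

```python
import math
import random

# Hypothetical four-word vocabulary and raw model scores ("logits").
VOCAB = ["the", "cat", "sat", "badword"]
LOGITS = [2.0, 1.5, 0.3, 3.0]

# Hypothetical blocklist standing in for a real moderation policy.
BLOCKLIST = {"badword"}


def top_k_sample(logits, k, temperature=1.0, rng=random):
    """Pick a token id at random from the k highest-scoring candidates."""
    # Keep only the ids of the k largest logits.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Temperature rescales the scores; softmax weights are taken over the survivors.
    scaled = [logits[i] / temperature for i in top]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(top, weights=weights, k=1)[0]


def passes_filter(text, blocklist=BLOCKLIST):
    """Return False if any whitespace-separated word is on the blocklist."""
    return not any(word in blocklist for word in text.lower().split())


token_id = top_k_sample(LOGITS, k=2)
word = VOCAB[token_id]
print(word, "allowed" if passes_filter(word) else "blocked")
```

None of this touches the weights, yet the choice of `k`, the temperature, and the contents of the blocklist all shape what a user finally sees, which is the sense in which such code rewards close reading.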

Going back to @minhhua12345's first comment, on expanding vectorization: one thing I think about is what it means to be a neighbor, and how neighbors and neighborhoods are created (the data used to train the model) and evaluated (the vectors).

Why does man + ruler = king? They are neighbors. But only according to a particular corpus or archive, as you state: “discourse, which is often used as training data for these models, highlights these types of stereotypes. Could the reason for "asian woman - sexy" be traced back to the orientalism that permeates 20th century western media?”

Man, ruler, and king are also oriented in a particular way, and when I say orientation I think of this as a queering.

How do we queer word embeddings? Do different engines provide different queerings that are reified, or perhaps gendered (their orientations embodied), in application code?

The “auratic mystery of AI” is something that corporations and foundations are actively cultivating. A number of posts here, and articles in the CCS issue of DHQ, recount the ways in which GAI and Deep Learning models are being characterized. Anyone who saw the documentary AlphaGo (directed by Greg Kohs, who is as well known for commercials as documentaries) comes away with the sense that we are on the brink of a superhuman era. Recently, a friend of mine attended a talk by a leader of the Turing Institute in the UK, who described the future of British labor as AI “prompt engineering.”

Thus, Hua and Raley’s “How to Do Things with Deep Learning Code” serves as an important counterweight to such rhetoric and marketing. However, the problem of understanding vectorization applied to models with not just billions but possibly trillions of parameters (GPT-3 has 175 billion; OpenAI has not disclosed GPT-4's count, and the widely circulated figure of 170 trillion is unverified) is compounded by the even trickier problem of the larger political economy of Big Tech. The tech firms that can afford to finance GAI have a vested interest in proprietary models, even as they actively “open wash” their products.

OpenAI began under the auspices of their research being “free from financial obligations,” and thus being able to better focus on “human impact.” Four years later, in 2019, they shed their non-profit status. The same year, over the course of several months, they released the source code for their GPT-2 model after initially demurring due to concerns about “malicious applications.”

The turn toward “explainability” in deep learning and AI is a welcome one. As David Berry highlights in his article, “The Explainability Turn,” attempts to hold AI models accountable and interpretable are vital to our cognitive well-being. He notes that the EU’s General Data Protection Regulation (GDPR) is an important model for establishing data subjects who have ‘natural rights’ that distinguish them from AI, ML, algorithms, and corporations. In the US, while a handful of states have enacted legislation in a similar vein to the GDPR, efforts at the federal level have not gotten far.

Furthermore, our understanding of explainability is deepened through the categorization put forward by Hua and Raley, which distinguishes between the foundational code of deep learning models, deemed Core Deep Learning Code (CDLC), and the supplementary Ancillary Deep Learning Code (ADLC) that typical developers can modify or substitute. The latter, in the form of APIs or LLM content filters, will more likely be available for CCS analysis than CDLC. In the case of OpenAI’s GPT series, since GPT-2, they have not released any source code for their subsequent models. Thus, the prospect of reading core deep learning code seems out of reach, thereby making ADLC the sole mechanism for looking into these systems.

In their recent paper, “Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI,” David Gray Widder, Meredith Whittaker, and Sarah Myers West examine the “confusing and diverse” ways in which ‘openness’ is applied to GAI. They survey a number of currently existing systems and find that many of the claims made about open GAI models are disingenuous. For example, Meta calls its LLaMa-2 model open source, but the Open Source Initiative takes issue with this characterization. Part of this stems, no doubt, from using old definitions of Open Source to apply to a very different time. The authors note that, “Once trained, an AI model can be released in the same way other software code would be released–under an open source license for reuse, leaked, or otherwise made available online. Reusing an already-trained AI model does not require having access to the underlying training or evaluation data. In this sense, many AI systems that are labeled ‘open’ are playing very loose with the term. Instead of providing meaningful documentation and access, they’re effectively wrappers of closed models, inheriting undocumented data, failing to provide annotated RLHF training data and rarely publishing their findings - and documenting their findings in independently reviewed publications even more sparingly.”

Ultimately, source code in the current political economy of Big Tech-driven GAI means CCS practitioners will have to continue dealing with the kinds of known unknowns that they have been dealing with all along. While open-source code has expanded its purview, proprietary code remains prevalent in many domains. Added to this are emergent unknown unknowns, which stem from complex decision pathways, lack of explainability, data-driven biases, and unpredictable outputs. Without organized pushback, the arms race that is GAI (less discussed, but undoubtedly a major vector in its growth, is world-wide military spending, both unclassified and classified) may leave us ignorant and in the shadows of powerful corporate and governmental interests.